# 4D Gaussian Splatting for Real-Time Dynamic Scene Rendering

## arXiv Preprint

### [Project Page](https://guanjunwu.github.io/4dgs/index.html) | [arXiv Paper](https://arxiv.org/abs/2310.08528)

[Guanjun Wu](https://guanjunwu.github.io/)<sup>1*</sup>, [Taoran Yi](https://github.com/taoranyi)<sup>2*</sup>, [Jiemin Fang](https://jaminfong.cn/)<sup>3</sup>, [Lingxi Xie](http://lingxixie.com/)<sup>3</sup>, [Xiaopeng Zhang](https://sites.google.com/site/zxphistory/)<sup>3</sup>, [Wei Wei](https://www.eric-weiwei.com/)<sup>1</sup>, [Wenyu Liu](http://eic.hust.edu.cn/professor/liuwenyu/)<sup>2</sup>, [Qi Tian](https://scholar.google.com/citations?hl=en&user=61b6eYkAAAAJ)<sup>3</sup>, [Xinggang Wang](https://xinggangw.info/)<sup>2✉</sup>

<sup>1</sup>School of CS, HUST &emsp; <sup>2</sup>School of EIC, HUST &emsp; <sup>3</sup>Huawei Inc.

---------------------------------------------------

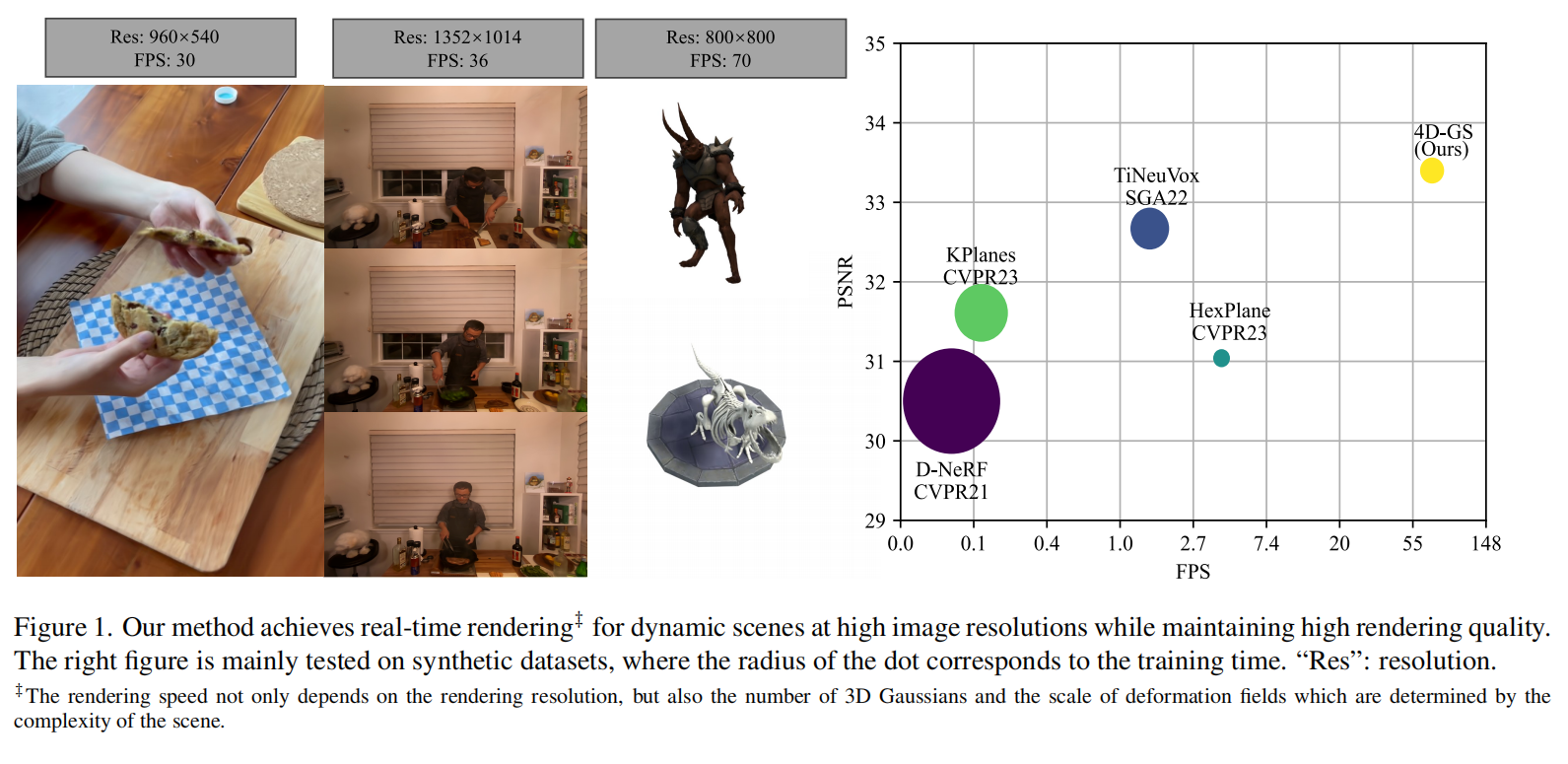

Our method converges very quickly and achieves real-time rendering speed.

## Environment Setup

Please follow the [3D-GS](https://github.com/graphdeco-inria/gaussian-splatting) instructions to install the relevant packages.

```bash
git clone https://github.com/hustvl/4DGaussians
cd 4DGaussians
conda create -n Gaussians4D python=3.7
conda activate Gaussians4D

pip install -r requirements.txt

# Clone (including any nested submodules) and build the CUDA rasterizer extension
cd submodules
git clone --recursive https://github.com/ingra14m/depth-diff-gaussian-rasterization
pip install -e depth-diff-gaussian-rasterization
```

In our environment, we use PyTorch 1.13.1 built for CUDA 11.6 (`torch==1.13.1+cu116`).
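To check whether an installed build matches this environment, the PyTorch version string can be split into its release number and CUDA tag. The sketch below is a hypothetical helper, not part of the repository; it only parses strings of the `1.13.1+cu116` form and treats builds without a `+cuXXX` tag (for example `+cpu`) as having no CUDA version.

```python
import re
from typing import Optional, Tuple

# Versions stated in this README for the authors' environment.
TESTED_RELEASE = (1, 13, 1)
TESTED_CUDA = "11.6"


def parse_torch_version(version: str) -> Tuple[Tuple[int, ...], Optional[str]]:
    """Split e.g. '1.13.1+cu116' into ((1, 13, 1), '11.6').

    The CUDA part is None for builds without a '+cuXXX' tag (such as '+cpu').
    """
    release, _, local = version.partition("+")
    numbers = []
    for piece in release.split("."):
        match = re.match(r"\d+", piece)
        if match is None:  # stop at non-numeric pieces such as 'dev20230101'
            break
        numbers.append(int(match.group()))
    # 'cu116' -> major '11', minor '6'; the last digit is the minor version.
    cuda = re.fullmatch(r"cu(\d+)(\d)", local)
    cuda_version = f"{cuda.group(1)}.{cuda.group(2)}" if cuda else None
    return tuple(numbers), cuda_version


def matches_tested_env(version: str) -> bool:
    """True when the version string equals the PyTorch/CUDA pair listed above."""
    return parse_torch_version(version) == (TESTED_RELEASE, TESTED_CUDA)
```

Inside the activated environment, `import torch; print(matches_tested_env(torch.__version__))` reports whether your build matches. A mismatch does not necessarily mean the code will fail; it only means your setup differs from the one the authors used.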

## Data Preparation

**For synthetic scenes:**

We use the dataset provided by [D-NeRF](https://github.com/albertpumarola/D-NeRF). You can download it from [Dropbox](https://www.dropbox.com/s/0bf6fl0ye2vz3vr/data.zip?dl=0).

**For real dynamic scenes:**

We use the dataset provided by [HyperNeRF](https://github.com/google/hypernerf). You can download scenes from the [HyperNeRF dataset release](https://github.com/google/hypernerf/releases/tag/v0.1) and organize them following [Nerfies](https://github.com/google/nerfies#datasets). The [Plenoptic Dataset](https://github.com/facebookresearch/Neural_3D_Video) can be downloaded from its official website. To save memory, extract the frames of each video, then organize your dataset as follows.
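Frame extraction can be done with `ffmpeg`. The sketch below is a hypothetical helper, not a script shipped with this repository, and it assumes that each Plenoptic scene folder contains one video per camera named `cam00.mp4`, `cam01.mp4`, and so on, and that `ffmpeg` is on your `PATH`. It writes `camXX/images/0000.png, 0001.png, ...` to match the layout below; `-start_number 0` is needed because ffmpeg's image sequence output numbers from 1 by default.

```python
import subprocess
from pathlib import Path
from typing import List


def ffmpeg_extract_command(video: Path) -> List[str]:
    """Command that dumps every frame of scene/camXX.mp4 into scene/camXX/images/%04d.png."""
    images_dir = video.with_suffix("") / "images"
    return [
        "ffmpeg",
        "-i", str(video),
        "-start_number", "0",  # number output frames from 0000 instead of the default 0001
        str(images_dir / "%04d.png"),
    ]


def extract_scene(scene_dir: Path, dry_run: bool = True) -> List[List[str]]:
    """Build (and, unless dry_run, execute) one extraction command per cam*.mp4 video."""
    commands = []
    for video in sorted(scene_dir.glob("cam*.mp4")):
        cmd = ffmpeg_extract_command(video)
        commands.append(cmd)
        if not dry_run:
            # ffmpeg does not create missing output directories itself.
            (video.with_suffix("") / "images").mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)
    return commands
```

Call it with `dry_run=True` first to review the commands, then run `extract_scene(Path("data/dynerf/cook_spinach"), dry_run=False)`.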

```
data
├── dnerf
│   ├── mutant
│   ├── standup
│   └── ...
├── hypernerf
│   ├── interp
│   ├── misc
│   └── virg
└── dynerf
    ├── cook_spinach
    │   ├── cam00
    │   │   └── images
    │   │       ├── 0000.png
    │   │       ├── 0001.png
    │   │       ├── 0002.png
    │   │       └── ...
    │   └── cam01
    │       └── images
    │           ├── 0000.png
    │           ├── 0001.png
    │           └── ...
    ├── cut_roasted_beef
    └── ...
```
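Before training on a multi-view scene, it can help to confirm that the extracted frames match this layout. The sketch below is a hypothetical sanity check, not part of the repository. It assumes that every camera of a synchronized scene should end up with the same number of frames, which is an assumption about the data rather than a documented requirement of the training code.

```python
from pathlib import Path
from typing import Dict


def count_frames(scene_dir: Path) -> Dict[str, int]:
    """Map each camera folder (cam00, cam01, ...) to its number of PNG frames."""
    return {
        cam.name: len(list((cam / "images").glob("*.png")))
        for cam in sorted(scene_dir.glob("cam*"))
        if (cam / "images").is_dir()  # skips cam*.mp4 files and folders without images/
    }


def check_dynerf_scene(scene_dir: Path) -> Dict[str, int]:
    """Raise ValueError unless every camera has an images/ folder with the same frame count."""
    counts = count_frames(scene_dir)
    if not counts:
        raise ValueError(f"no cam*/images folders found in {scene_dir}")
    if len(set(counts.values())) != 1:
        raise ValueError(f"cameras have different frame counts: {counts}")
    return counts
```

For example, `print(check_dynerf_scene(Path("data/dynerf/cook_spinach")))` prints the per-camera frame counts, or raises if a camera is missing frames.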

## Training

To train on a synthetic scene such as `bouncingballs`, run

```bash
python train.py -s data/dnerf/bouncingballs --port 6017 --expname "dnerf/bouncingballs" --configs arguments/dnerf/bouncingballs.py
```
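The same command can be generated for other D-NeRF scenes by substituting the scene name into the data path, experiment name, and config path. This is a hypothetical sketch that reuses only the flags shown above; it assumes a matching `arguments/dnerf/<scene>.py` exists for each scene you list. Each run gets its own port so that runs launched in parallel do not try to use the same one.

```python
import shlex
from typing import List


def train_command(scene: str, port: int) -> List[str]:
    """Mirror the bouncingballs command for another scene under data/dnerf/."""
    return [
        "python", "train.py",
        "-s", f"data/dnerf/{scene}",
        "--port", str(port),
        "--expname", f"dnerf/{scene}",
        "--configs", f"arguments/dnerf/{scene}.py",  # assumed to exist for every scene
    ]


def as_shell(argv: List[str]) -> str:
    # shlex.join requires Python 3.8+, while the environment above uses 3.7.
    return " ".join(shlex.quote(arg) for arg in argv)


if __name__ == "__main__":
    for offset, scene in enumerate(["bouncingballs", "mutant", "standup"]):
        print(as_shell(train_command(scene, 6017 + offset)))
```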

You can customize your training configuration through the config files in `arguments/`.
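A common pattern for Python config files is to keep shared defaults in one place and override only a few values per scene. The sketch below illustrates that layering with a plain recursive dictionary merge. It is not the loader used by this repository: how the files in `arguments/` are read or inherit from one another is not described here, and every section and key name below is hypothetical.

```python
import copy
from typing import Any, Dict


def merge_config(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict with `override` layered recursively on top of `base`."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)  # merge nested sections
        else:
            merged[key] = copy.deepcopy(value)  # plain values replace the default
    return merged


# Hypothetical section and key names, for illustration only.
DEFAULTS = {"optimization": {"iterations": 20000, "batch_size": 1}, "model": {"net_width": 64}}
SCENE_OVERRIDES = {"optimization": {"iterations": 14000}}
```

Here `merge_config(DEFAULTS, SCENE_OVERRIDES)` changes only `iterations` and keeps every other default, without mutating `DEFAULTS`.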

## Rendering

Run the following script to render the images.

```bash
python render.py --model_path "output/dnerf/bouncingballs/" --skip_train --configs arguments/dnerf/bouncingballs.py
```
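Rendering and the evaluation script `metrics.py` (see Evaluation) can be chained so that evaluation only starts after rendering has finished. The following is a hypothetical sketch that uses only the flags shown in this README. `subprocess.run` blocks until each step exits, and `check=True` stops the chain if a step fails.

```python
import subprocess
from typing import List


def render_then_evaluate(model_path: str, config: str) -> List[List[str]]:
    """Build the render and evaluation commands for one trained model."""
    return [
        ["python", "render.py", "--model_path", model_path, "--skip_train", "--configs", config],
        ["python", "metrics.py", "--model_path", model_path],
    ]


def run_in_order(commands: List[List[str]]) -> None:
    for cmd in commands:
        # Waits for the process to exit; raises CalledProcessError on a non-zero exit code.
        subprocess.run(cmd, check=True)
```

From the repository root, `run_in_order(render_then_evaluate("output/dnerf/bouncingballs/", "arguments/dnerf/bouncingballs.py"))` runs both steps for the `bouncingballs` model.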

## Evaluation

Run the following script to evaluate the model.

```bash
python metrics.py --model_path "output/dnerf/bouncingballs/"
```
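This README does not list which metrics `metrics.py` reports. PSNR is one standard image-quality metric for novel view synthesis, defined as PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value. The plain-Python sketch below is for illustration only; real evaluation code typically operates on image tensors rather than flat lists.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized, flattened images."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```

For example, two 2-pixel images in `[0, 1]` that differ by 0.1 in one pixel have an MSE of 0.005, giving a PSNR of about 23.01 dB.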

## Scripts

There are some helpful scripts in `scripts/`; feel free to use them.

---

Some of our source code is borrowed from [3DGS](https://github.com/graphdeco-inria/gaussian-splatting), [K-Planes](https://github.com/Giodiro/kplanes_nerfstudio), [HexPlane](https://github.com/Caoang327/HexPlane), and [TiNeuVox](https://github.com/hustvl/TiNeuVox). We sincerely appreciate the excellent work of these authors.

## Acknowledgement

We would like to express our sincere gratitude to @zhouzhenghong-gt for his revisions to our code and discussions on the content of our paper.

## Citation

If you find this repository helpful in your research, please consider citing our paper and giving it a ⭐.

```bibtex
@article{wu20234dgaussians,
  title={4D Gaussian Splatting for Real-Time Dynamic Scene Rendering},
  author={Wu, Guanjun and Yi, Taoran and Fang, Jiemin and Xie, Lingxi and Zhang, Xiaopeng and Wei, Wei and Liu, Wenyu and Tian, Qi and Wang, Xinggang},
  journal={arXiv preprint arXiv:2310.08528},
  year={2023}
}
```