Compare commits

30 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 745b2cafce | | |
| | 6538ded65f | | |
| | fc5052b6da | | |
| | 9a8aa07094 | | |
| | efccec0bd7 | | |
| | 47fcdfd90f | | |
| | cdfcbd0a2b | | |
| | 266bd83546 | | |
| | b238fbcd41 | | |
| | efdd1b3971 | | |
| | 1e214c5be2 | | |
| | b3c3cc8f26 | | |
| | 3668bfb14f | | |
| | bc8ff74e98 | | |
| | 2367c156e2 | | |
| | 494db746f7 | | |
| | 94bfad8d27 | | |
| | 685054c5bc | | |
| | 7413f1354f | | |
| | 14768a6ed6 | | |
| | 664f304631 | | |
| | a8df33c0dc | | |
| | 5a481b5022 | | |
| | 2b6f581f9a | | |
| | e88b8baf19 | | |
| | 8894d72e6b | | |
| | b36fb2dd73 | | |
| | 9a0c16a126 | | |
| | 4ef21545a4 | | |
| | 4368eb2893 | | |

2

.github/ISSUE_TEMPLATE/config.yml

vendored


@@ -1,5 +1,5 @@
blank_issues_enabled: true
contact_links:
- name: Jan Discussions
url: https://github.com/orgs/janhq/discussions/categories/q-a
url: https://github.com/orgs/menloresearch/discussions/categories/q-a
about: Get help, discuss features & roadmap, and share your projects

6

.github/workflows/jan-server-web-ci-dev.yml

vendored


@@ -12,7 +12,11 @@ jobs:
build-and-preview:
runs-on: [ubuntu-24-04-docker]
env:
<<<<<<< HEAD
JAN_API_BASE: "https://api-dev.menlo.ai/v1"
=======
MENLO_PLATFORM_BASE_URL: "https://api-dev.jan.ai/v1"
>>>>>>> c854c54c0 (chore: update api domain to jan.ai (#6832))
permissions:
pull-requests: write
contents: write

@@ -52,7 +56,7 @@ jobs:

- name: Build docker image
run: |
docker build --build-arg MENLO_PLATFORM_BASE_URL=${{ env.MENLO_PLATFORM_BASE_URL }} -t ${{ steps.vars.outputs.FULL_IMAGE }} .
docker build --build-arg JAN_API_BASE=${{ env.JAN_API_BASE }} -t ${{ steps.vars.outputs.FULL_IMAGE }} .

- name: Push docker image
if: github.event_name == 'push'
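The `env:` hunk of this workflow includes unresolved merge-conflict markers (`<<<<<<< HEAD`, `=======`, `>>>>>>> c854c54c0`), so the file on the new side of the diff would most likely fail to parse as YAML. A minimal sketch of a check that flags such leftovers before merging is shown below. The helper name `findConflictMarkers` is hypothetical and is not part of the repository.

```typescript
// Hypothetical helper (not repository code): return the 1-based numbers of
// lines that begin with a Git conflict marker. Leading whitespace is tolerated
// because indentation may have been added around the markers.
function findConflictMarkers(text: string): number[] {
  const marker = /^\s*(<{7}|={7}|>{7})( |$)/;
  const hits: number[] = [];
  const lines = text.split(/\r?\n/);
  for (let i = 0; i < lines.length; i++) {
    const line = lines[i];
    if (line !== undefined && marker.test(line)) {
      hits.push(i + 1);
    }
  }
  return hits;
}

// The env block from the hunk, reduced to the lines that matter.
const workflowSnippet = [
  "env:",
  "<<<<<<< HEAD",
  'JAN_API_BASE: "https://api-dev.menlo.ai/v1"',
  "=======",
  'MENLO_PLATFORM_BASE_URL: "https://api-dev.jan.ai/v1"',
  ">>>>>>> c854c54c0 (chore: update api domain to jan.ai (#6832))",
  "permissions:",
].join("\n");

const conflictLines = findConflictMarkers(workflowSnippet); // [2, 4, 6]
```

A check like this could run over the workflow files in CI. The staging workflow further down carries the same markers.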

2

.github/workflows/jan-server-web-ci-prod.yml

vendored


@@ -43,7 +43,7 @@ jobs:
- name: Install dependencies
run: make config-yarn && yarn install && yarn build:core && make build-web-app
env:
MENLO_PLATFORM_BASE_URL: ${{ env.MENLO_PLATFORM_BASE_URL }}
JAN_API_BASE: ${{ env.JAN_API_BASE }}
GA_MEASUREMENT_ID: ${{ env.GA_MEASUREMENT_ID }}

- name: Publish to Cloudflare Pages Production

6

.github/workflows/jan-server-web-ci-stag.yml

vendored


@@ -12,7 +12,11 @@ jobs:
build-and-preview:
runs-on: [ubuntu-24-04-docker]
env:
<<<<<<< HEAD
JAN_API_BASE: "https://api-stag.menlo.ai/v1"
=======
MENLO_PLATFORM_BASE_URL: "https://api-stag.jan.ai/v1"
>>>>>>> c854c54c0 (chore: update api domain to jan.ai (#6832))
permissions:
pull-requests: write
contents: write

@@ -52,7 +56,7 @@ jobs:

- name: Build docker image
run: |
docker build --build-arg MENLO_PLATFORM_BASE_URL=${{ env.MENLO_PLATFORM_BASE_URL }} -t ${{ steps.vars.outputs.FULL_IMAGE }} .
docker build --build-arg JAN_API_BASE=${{ env.JAN_API_BASE }} -t ${{ steps.vars.outputs.FULL_IMAGE }} .

- name: Push docker image
if: github.event_name == 'push'

108

.github/workflows/jan-tauri-build-nightly.yaml

vendored


@@ -168,62 +168,62 @@ jobs:
AWS_DEFAULT_REGION: ${{ secrets.DELTA_AWS_REGION }}
AWS_EC2_METADATA_DISABLED: 'true'

# noti-discord-nightly-and-update-url-readme:
# needs:
# [
# build-macos,
# build-windows-x64,
# build-linux-x64,
# get-update-version,
# set-public-provider,
# sync-temp-to-latest,
# ]
# secrets: inherit
# if: github.event_name == 'schedule'
# uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml
# with:
# ref: refs/heads/dev
# build_reason: Nightly
# push_to_branch: dev
# new_version: ${{ needs.get-update-version.outputs.new_version }}
noti-discord-nightly-and-update-url-readme:
needs:
[
build-macos,
build-windows-x64,
build-linux-x64,
get-update-version,
set-public-provider,
sync-temp-to-latest,
]
secrets: inherit
if: github.event_name == 'schedule'
uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml
with:
ref: refs/heads/dev
build_reason: Nightly
push_to_branch: dev
new_version: ${{ needs.get-update-version.outputs.new_version }}

# noti-discord-pre-release-and-update-url-readme:
# needs:
# [
# build-macos,
# build-windows-x64,
# build-linux-x64,
# get-update-version,
# set-public-provider,
# sync-temp-to-latest,
# ]
# secrets: inherit
# if: github.event_name == 'push'
# uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml
# with:
# ref: refs/heads/dev
# build_reason: Pre-release
# push_to_branch: dev
# new_version: ${{ needs.get-update-version.outputs.new_version }}
noti-discord-pre-release-and-update-url-readme:
needs:
[
build-macos,
build-windows-x64,
build-linux-x64,
get-update-version,
set-public-provider,
sync-temp-to-latest,
]
secrets: inherit
if: github.event_name == 'push'
uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml
with:
ref: refs/heads/dev
build_reason: Pre-release
push_to_branch: dev
new_version: ${{ needs.get-update-version.outputs.new_version }}

# noti-discord-manual-and-update-url-readme:
# needs:
# [
# build-macos,
# build-windows-x64,
# build-linux-x64,
# get-update-version,
# set-public-provider,
# sync-temp-to-latest,
# ]
# secrets: inherit
# if: github.event_name == 'workflow_dispatch' && github.event.inputs.public_provider == 'aws-s3'
# uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml
# with:
# ref: refs/heads/dev
# build_reason: Manual
# push_to_branch: dev
# new_version: ${{ needs.get-update-version.outputs.new_version }}
noti-discord-manual-and-update-url-readme:
needs:
[
build-macos,
build-windows-x64,
build-linux-x64,
get-update-version,
set-public-provider,
sync-temp-to-latest,
]
secrets: inherit
if: github.event_name == 'workflow_dispatch' && github.event.inputs.public_provider == 'aws-s3'
uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml
with:
ref: refs/heads/dev
build_reason: Manual
push_to_branch: dev
new_version: ${{ needs.get-update-version.outputs.new_version }}

comment-pr-build-url:
needs:

6

.github/workflows/jan-tauri-build.yaml

vendored


@@ -82,11 +82,11 @@ jobs:
VERSION=${{ needs.get-update-version.outputs.new_version }}
PUB_DATE=$(date -u +"%Y-%m-%dT%H:%M:%S.%3NZ")
LINUX_SIGNATURE="${{ needs.build-linux-x64.outputs.APPIMAGE_SIG }}"
LINUX_URL="https://github.com/janhq/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-linux-x64.outputs.APPIMAGE_FILE_NAME }}"
LINUX_URL="https://github.com/menloresearch/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-linux-x64.outputs.APPIMAGE_FILE_NAME }}"
WINDOWS_SIGNATURE="${{ needs.build-windows-x64.outputs.WIN_SIG }}"
WINDOWS_URL="https://github.com/janhq/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-windows-x64.outputs.FILE_NAME }}"
WINDOWS_URL="https://github.com/menloresearch/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-windows-x64.outputs.FILE_NAME }}"
DARWIN_SIGNATURE="${{ needs.build-macos.outputs.MAC_UNIVERSAL_SIG }}"
DARWIN_URL="https://github.com/janhq/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-macos.outputs.TAR_NAME }}"
DARWIN_URL="https://github.com/menloresearch/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-macos.outputs.TAR_NAME }}"

jq --arg version "$VERSION" \
--arg pub_date "$PUB_DATE" \
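The only thing this hunk changes is the repository owner inside the three release-asset URLs; the URL shape and the `PUB_DATE` format are the same on both sides. As a rough sketch of how those two values are put together, the helpers below build the same URL pattern and a UTC timestamp with millisecond precision. The function names, the file name, and the version in the usage lines are illustrative assumptions, not values from the workflow.

```typescript
// Illustrative helpers (not repository code).

// Mirrors the pattern
// https://github.com/<owner>/jan/releases/download/v<version>/<file>
function releaseAssetUrl(owner: string, version: string, fileName: string): string {
  return "https://github.com/" + owner + "/jan/releases/download/v" + version + "/" + fileName;
}

// Date.prototype.toISOString yields the same shape as
// date -u +"%Y-%m-%dT%H:%M:%S.%3NZ", e.g. 1970-01-01T00:00:00.000Z.
function pubDateUtc(now: Date): string {
  return now.toISOString();
}

// Hypothetical inputs, for illustration only.
const linuxUrl = releaseAssetUrl("menloresearch", "0.0.0", "Jan_0.0.0_amd64.AppImage");
const epochStamp = pubDateUtc(new Date(0)); // "1970-01-01T00:00:00.000Z"
```

Keeping the owner as a parameter means a later repository move touches one argument rather than three URL strings.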

@@ -29,7 +29,7 @@ jobs:
local max_retries=3
local tag
while [ $retries -lt $max_retries ]; do
tag=$(curl -s https://api.github.com/repos/janhq/jan/releases/latest | jq -r .tag_name)
tag=$(curl -s https://api.github.com/repos/menloresearch/jan/releases/latest | jq -r .tag_name)
if [ -n "$tag" ] && [ "$tag" != "null" ]; then
echo $tag
return
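The loop in this hunk retries the GitHub releases lookup up to `max_retries` times and accepts a tag only when it is non-empty and not the literal string `null`, which is what `jq -r` prints for a missing field. The same control flow can be sketched as follows, with the HTTP call replaced by a caller-supplied function. `fetchTag` and the `null` return after the last attempt are assumptions, since the hunk does not show what the script does once the retries run out.

```typescript
// Sketch only: fetchTag stands in for the curl | jq pipeline and is hypothetical.
function getLatestTag(fetchTag: () => string, maxRetries: number = 3): string | null {
  let retries = 0;
  while (retries < maxRetries) {
    const tag = fetchTag();
    // Same acceptance test as the shell: non-empty and not the string "null".
    if (tag !== "" && tag !== "null") {
      return tag;
    }
    retries += 1;
  }
  // Assumption: signal exhaustion with null; the original behavior is not shown.
  return null;
}

// Usage with a stub that fails twice before succeeding.
const stubResponses = ["", "null", "v0.0.0"];
let stubCalls = 0;
const latestTag = getLatestTag(() => {
  const response = stubResponses[stubCalls];
  stubCalls += 1;
  return response !== undefined ? response : "";
});
```

A real implementation would probably also wait between attempts; that delay is left out here to keep the sketch synchronous.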

@@ -50,6 +50,6 @@ jobs:
- macOS Universal: https://delta.jan.ai/nightly/Jan-nightly_{{ VERSION }}_universal.dmg
- Linux Deb: https://delta.jan.ai/nightly/Jan-nightly_{{ VERSION }}_amd64.deb
- Linux AppImage: https://delta.jan.ai/nightly/Jan-nightly_{{ VERSION }}_amd64.AppImage
- Github action run: https://github.com/janhq/jan/actions/runs/{{ GITHUB_RUN_ID }}
- Github action run: https://github.com/menloresearch/jan/actions/runs/{{ GITHUB_RUN_ID }}
env:
DISCORD_WEBHOOK: ${{ secrets.DISCORD_WEBHOOK }}

@@ -49,8 +49,6 @@ jobs:
# Update tauri.conf.json
jq --arg version "${{ inputs.new_version }}" '.version = $version | .bundle.createUpdaterArtifacts = false' ./src-tauri/tauri.conf.json > /tmp/tauri.conf.json
mv /tmp/tauri.conf.json ./src-tauri/tauri.conf.json
jq '.bundle.windows.nsis.template = "tauri.bundle.windows.nsis.template"' ./src-tauri/tauri.windows.conf.json > /tmp/tauri.windows.conf.json
mv /tmp/tauri.windows.conf.json ./src-tauri/tauri.windows.conf.json
jq '.bundle.windows.signCommand = "echo External build - skipping signature: %1"' ./src-tauri/tauri.windows.conf.json > /tmp/tauri.windows.conf.json
mv /tmp/tauri.windows.conf.json ./src-tauri/tauri.windows.conf.json
jq --arg version "${{ inputs.new_version }}" '.version = $version' web-app/package.json > /tmp/package.json
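Each `jq` call in this hunk rewrites one or two keys of a JSON config into a temporary file, and the following `mv` replaces the original. The first filter, `.version = $version | .bundle.createUpdaterArtifacts = false`, can be read as a pure function over the parsed config. The sketch below illustrates that reading; the `TauriConf` type and the function name are assumptions made for the example and only cover the keys the filter touches.

```typescript
// Minimal, assumed shape: only the keys the jq filter touches are typed.
interface TauriConf {
  version?: string;
  bundle?: { createUpdaterArtifacts?: boolean; [key: string]: unknown };
  [key: string]: unknown;
}

// Returns a copy with version and bundle.createUpdaterArtifacts set,
// leaving every other key untouched (as the jq assignment does).
function withVersion(conf: TauriConf, version: string, createUpdaterArtifacts: boolean): TauriConf {
  const bundle = conf.bundle !== undefined ? conf.bundle : {};
  return {
    ...conf,
    version: version,
    bundle: { ...bundle, createUpdaterArtifacts: createUpdaterArtifacts },
  };
}

// Hypothetical input, for illustration only.
const exampleConf: TauriConf = {
  version: "0.0.0",
  productName: "Jan",
  bundle: { active: true, createUpdaterArtifacts: true },
};
const updatedConf = withVersion(exampleConf, "0.0.1", false);
```

Returning a new object rather than mutating the input matches the write-to-temp-then-move pattern of the shell commands: the original stays intact until the replacement is complete.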

@@ -82,36 +80,6 @@ jobs:
echo "---------./src-tauri/Cargo.toml---------"
cat ./src-tauri/Cargo.toml

generate_build_version() {
### Examble
### input 0.5.6 output will be 0.5.6 and 0.5.6.0
### input 0.5.6-rc2-beta output will be 0.5.6 and 0.5.6.2
### input 0.5.6-1213 output will be 0.5.6 and and 0.5.6.1213
local new_version="$1"
local base_version
local t_value
# Check if it has a "-"
if [[ "$new_version" == *-* ]]; then
base_version="${new_version%%-*}" # part before -
suffix="${new_version#*-}" # part after -
# Check if it is rcX-beta
if [[ "$suffix" =~ ^rc([0-9]+)-beta$ ]]; then
t_value="${BASH_REMATCH[1]}"
else
t_value="$suffix"
fi
else
base_version="$new_version"
t_value="0"
fi
# Export two values
new_base_version="$base_version"
new_build_version="${base_version}.${t_value}"
}
generate_build_version ${{ inputs.new_version }}
sed -i "s/jan_version/$new_base_version/g" ./src-tauri/tauri.bundle.windows.nsis.template
sed -i "s/jan_build/$new_build_version/g" ./src-tauri/tauri.bundle.windows.nsis.template

if [ "${{ inputs.channel }}" != "stable" ]; then
jq '.plugins.updater.endpoints = ["https://delta.jan.ai/${{ inputs.channel }}/latest.json"]' ./src-tauri/tauri.conf.json > /tmp/tauri.conf.json
mv /tmp/tauri.conf.json ./src-tauri/tauri.conf.json
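The `generate_build_version` function in this hunk splits a version string at its first `-` and derives a four-part build version from the suffix. A TypeScript port of the same rules is sketched below so the three documented cases can be checked directly. The names `BuildVersion` and `generateBuildVersion` are illustrative and not repository code.

```typescript
// Illustrative port of the shell function; not repository code.
interface BuildVersion {
  base: string;  // e.g. "0.5.6"
  build: string; // e.g. "0.5.6.2"
}

function generateBuildVersion(newVersion: string): BuildVersion {
  const dash = newVersion.indexOf("-");
  if (dash === -1) {
    // No "-": the build component defaults to 0.
    return { base: newVersion, build: newVersion + ".0" };
  }
  const base = newVersion.slice(0, dash);    // part before the first "-"
  const suffix = newVersion.slice(dash + 1); // part after the first "-"
  const rc = /^rc([0-9]+)-beta$/.exec(suffix);
  // rcX-beta contributes X; any other suffix is used verbatim.
  const tValue = rc !== null && rc[1] !== undefined ? rc[1] : suffix;
  return { base: base, build: base + "." + tValue };
}

const plainVersion = generateBuildVersion("0.5.6");            // base 0.5.6, build 0.5.6.0
const rcVersion = generateBuildVersion("0.5.6-rc2-beta");      // base 0.5.6, build 0.5.6.2
const numberedVersion = generateBuildVersion("0.5.6-1213");    // base 0.5.6, build 0.5.6.1213
```

As in the shell original, a suffix that is neither `rcX-beta` nor numeric passes through unchanged, so the caller is responsible for supplying versions that produce a valid build number.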

@@ -135,14 +103,7 @@ jobs:
chmod +x .github/scripts/rename-workspace.sh
.github/scripts/rename-workspace.sh ./package.json ${{ inputs.channel }}
cat ./package.json
sed -i "s/jan_productname/Jan-${{ inputs.channel }}/g" ./src-tauri/tauri.bundle.windows.nsis.template
sed -i "s/jan_mainbinaryname/jan-${{ inputs.channel }}/g" ./src-tauri/tauri.bundle.windows.nsis.template
else
sed -i "s/jan_productname/Jan/g" ./src-tauri/tauri.bundle.windows.nsis.template
sed -i "s/jan_mainbinaryname/jan/g" ./src-tauri/tauri.bundle.windows.nsis.template
fi
echo "---------nsis.template---------"
cat ./src-tauri/tauri.bundle.windows.nsis.template
- name: Build app
shell: bash
run: |

@@ -98,15 +98,9 @@ jobs:
# Update tauri.conf.json
jq --arg version "${{ inputs.new_version }}" '.version = $version | .bundle.createUpdaterArtifacts = true' ./src-tauri/tauri.conf.json > /tmp/tauri.conf.json
mv /tmp/tauri.conf.json ./src-tauri/tauri.conf.json
jq '.bundle.windows.nsis.template = "tauri.bundle.windows.nsis.template"' ./src-tauri/tauri.windows.conf.json > /tmp/tauri.windows.conf.json
mv /tmp/tauri.windows.conf.json ./src-tauri/tauri.windows.conf.json
jq --arg version "${{ inputs.new_version }}" '.version = $version' web-app/package.json > /tmp/package.json
mv /tmp/package.json web-app/package.json

# Add sign commands to tauri.windows.conf.json
jq '.bundle.windows.signCommand = "powershell -ExecutionPolicy Bypass -File ./sign.ps1 %1"' ./src-tauri/tauri.windows.conf.json > /tmp/tauri.windows.conf.json
mv /tmp/tauri.windows.conf.json ./src-tauri/tauri.windows.conf.json

# Update tauri plugin versions

jq --arg version "${{ inputs.new_version }}" '.version = $version' ./src-tauri/plugins/tauri-plugin-hardware/package.json > /tmp/package.json

@@ -133,35 +127,9 @@ jobs:
echo "---------./src-tauri/Cargo.toml---------"
cat ./src-tauri/Cargo.toml

generate_build_version() {
### Example
### input 0.5.6 output will be 0.5.6 and 0.5.6.0
### input 0.5.6-rc2-beta output will be 0.5.6 and 0.5.6.2
### input 0.5.6-1213 output will be 0.5.6 and and 0.5.6.1213
local new_version="$1"
local base_version
local t_value
# Check if it has a "-"
if [[ "$new_version" == *-* ]]; then
base_version="${new_version%%-*}" # part before -
suffix="${new_version#*-}" # part after -
# Check if it is rcX-beta
if [[ "$suffix" =~ ^rc([0-9]+)-beta$ ]]; then
t_value="${BASH_REMATCH[1]}"
else
t_value="$suffix"
fi
else
base_version="$new_version"
t_value="0"
fi
# Export two values
new_base_version="$base_version"
new_build_version="${base_version}.${t_value}"
}
generate_build_version ${{ inputs.new_version }}
sed -i "s/jan_version/$new_base_version/g" ./src-tauri/tauri.bundle.windows.nsis.template
sed -i "s/jan_build/$new_build_version/g" ./src-tauri/tauri.bundle.windows.nsis.template
# Add sign commands to tauri.windows.conf.json
jq '.bundle.windows.signCommand = "powershell -ExecutionPolicy Bypass -File ./sign.ps1 %1"' ./src-tauri/tauri.windows.conf.json > /tmp/tauri.windows.conf.json
mv /tmp/tauri.windows.conf.json ./src-tauri/tauri.windows.conf.json

echo "---------tauri.windows.conf.json---------"
cat ./src-tauri/tauri.windows.conf.json

@@ -195,14 +163,7 @@ jobs:
chmod +x .github/scripts/rename-workspace.sh
.github/scripts/rename-workspace.sh ./package.json ${{ inputs.channel }}
cat ./package.json
sed -i "s/jan_productname/Jan-${{ inputs.channel }}/g" ./src-tauri/tauri.bundle.windows.nsis.template
sed -i "s/jan_mainbinaryname/jan-${{ inputs.channel }}/g" ./src-tauri/tauri.bundle.windows.nsis.template
else
sed -i "s/jan_productname/Jan/g" ./src-tauri/tauri.bundle.windows.nsis.template
sed -i "s/jan_mainbinaryname/jan/g" ./src-tauri/tauri.bundle.windows.nsis.template
fi
echo "---------nsis.template---------"
cat ./src-tauri/tauri.bundle.windows.nsis.template

- name: Install AzureSignTool
run: |

@@ -273,6 +234,8 @@ jobs:
# Upload for tauri updater
aws s3 cp ./${{ steps.metadata.outputs.FILE_NAME }} s3://${{ secrets.DELTA_AWS_S3_BUCKET_NAME }}/temp-${{ inputs.channel }}/${{ steps.metadata.outputs.FILE_NAME }}
aws s3 cp ./${{ steps.metadata.outputs.FILE_NAME }}.sig s3://${{ secrets.DELTA_AWS_S3_BUCKET_NAME }}/temp-${{ inputs.channel }}/${{ steps.metadata.outputs.FILE_NAME }}.sig

aws s3 cp ./src-tauri/target/release/bundle/msi/${{ steps.metadata.outputs.MSI_FILE_NAME }} s3://${{ secrets.DELTA_AWS_S3_BUCKET_NAME }}/temp-${{ inputs.channel }}/${{ steps.metadata.outputs.MSI_FILE_NAME }}
env:
AWS_ACCESS_KEY_ID: ${{ secrets.DELTA_AWS_ACCESS_KEY_ID }}
AWS_SECRET_ACCESS_KEY: ${{ secrets.DELTA_AWS_SECRET_ACCESS_KEY }}

@@ -289,3 +252,13 @@ jobs:
asset_path: ./src-tauri/target/release/bundle/nsis/${{ steps.metadata.outputs.FILE_NAME }}
asset_name: ${{ steps.metadata.outputs.FILE_NAME }}
asset_content_type: application/octet-stream
- name: Upload release assert if public provider is github
if: inputs.public_provider == 'github'
env:
GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
uses: actions/upload-release-asset@v1.0.1
with:
upload_url: ${{ inputs.upload_url }}
asset_path: ./src-tauri/target/release/bundle/msi/${{ steps.metadata.outputs.MSI_FILE_NAME }}
asset_name: ${{ steps.metadata.outputs.MSI_FILE_NAME }}
asset_content_type: application/octet-stream

@@ -143,7 +143,7 @@ jan/

**Option 1: The Easy Way (Make)**
```bash
git clone https://github.com/janhq/jan
git clone https://github.com/menloresearch/jan
cd jan
make dev
```

@@ -152,8 +152,8 @@ make dev

### Reporting Bugs

- **Ensure the bug was not already reported** by searching on GitHub under [Issues](https://github.com/janhq/jan/issues)
- If you're unable to find an open issue addressing the problem, [open a new one](https://github.com/janhq/jan/issues/new)
- **Ensure the bug was not already reported** by searching on GitHub under [Issues](https://github.com/menloresearch/jan/issues)
- If you're unable to find an open issue addressing the problem, [open a new one](https://github.com/menloresearch/jan/issues/new)
- Include your system specs and error logs - it helps a ton

### Suggesting Enhancements

@@ -1,8 +1,8 @@
# Stage 1: Build stage with Node.js and Yarn v4
FROM node:20-alpine AS builder

ARG MENLO_PLATFORM_BASE_URL=https://api-dev.menlo.ai/v1
ENV MENLO_PLATFORM_BASE_URL=$MENLO_PLATFORM_BASE_URL
ARG JAN_API_BASE=https://api-dev.jan.ai/v1
ENV JAN_API_BASE=$JAN_API_BASE

# Install build dependencies
RUN apk add --no-cache \

@@ -28,7 +28,6 @@ COPY ./Makefile ./Makefile
COPY ./.* /
COPY ./package.json ./package.json
COPY ./yarn.lock ./yarn.lock
COPY ./pre-install ./pre-install
COPY ./core ./core

# Build web application

1

Makefile


@@ -117,6 +117,7 @@ lint: install-and-build
test: lint
yarn download:bin
ifeq ($(OS),Windows_NT)
yarn download:windows-installer
endif
yarn test
yarn copy:assets:tauri

16

README.md


@@ -4,10 +4,10 @@

<p align="center">
<!-- ALL-CONTRIBUTORS-BADGE:START - Do not remove or modify this section -->
<img alt="GitHub commit activity" src="https://img.shields.io/github/commit-activity/m/janhq/jan"/>
<img alt="Github Last Commit" src="https://img.shields.io/github/last-commit/janhq/jan"/>
<img alt="Github Contributors" src="https://img.shields.io/github/contributors/janhq/jan"/>
<img alt="GitHub closed issues" src="https://img.shields.io/github/issues-closed/janhq/jan"/>
<img alt="GitHub commit activity" src="https://img.shields.io/github/commit-activity/m/menloresearch/jan"/>
<img alt="Github Last Commit" src="https://img.shields.io/github/last-commit/menloresearch/jan"/>
<img alt="Github Contributors" src="https://img.shields.io/github/contributors/menloresearch/jan"/>
<img alt="GitHub closed issues" src="https://img.shields.io/github/issues-closed/menloresearch/jan"/>
<img alt="Discord" src="https://img.shields.io/discord/1107178041848909847?label=discord"/>
</p>


@@ -15,7 +15,7 @@
<a href="https://www.jan.ai/docs/desktop">Getting Started</a>
- <a href="https://discord.gg/Exe46xPMbK">Community</a>
- <a href="https://jan.ai/changelog">Changelog</a>
- <a href="https://github.com/janhq/jan/issues">Bug reports</a>
- <a href="https://github.com/menloresearch/jan/issues">Bug reports</a>
</p>

Jan is bringing the best of open-source AI in an easy-to-use product. Download and run LLMs with **full control** and **privacy**.

@@ -48,7 +48,7 @@ The easiest way to get started is by downloading one of the following versions f
</table>


Download from [jan.ai](https://jan.ai/) or [GitHub Releases](https://github.com/janhq/jan/releases).
Download from [jan.ai](https://jan.ai/) or [GitHub Releases](https://github.com/menloresearch/jan/releases).

## Features


@@ -73,7 +73,7 @@ For those who enjoy the scenic route:
### Run with Make

```bash
git clone https://github.com/janhq/jan
git clone https://github.com/menloresearch/jan
cd jan
make dev
```

@@ -128,7 +128,7 @@ Contributions welcome. See [CONTRIBUTING.md](CONTRIBUTING.md) for the full spiel

## Contact

- **Bugs**: [GitHub Issues](https://github.com/janhq/jan/issues)
- **Bugs**: [GitHub Issues](https://github.com/menloresearch/jan/issues)
- **Business**: hello@jan.ai
- **Jobs**: hr@jan.ai
- **General Discussion**: [Discord](https://discord.gg/FTk2MvZwJH)

40

WEB_VERSION_TRACKER.md

Normal file


@@ -0,0 +1,40 @@
# Jan Web Version Tracker

Internal tracker for web component changes and features.

## v0.0.12 (Current)
**Release Date**: 2025-10-02
**Commit SHA**: df145d63a93bd27336b5b539ce0719fe9c7719e3

**Main Features**:
- Search button instead of tools
- Projects support properly for local used
- Temporary chat mode
- Performance enhancement: prevent thread items over fetching on app start
- Fix Google Tag

## v0.0.11
**Release Date**: 2025-09-23
**Commit SHA**: 494db746f7dd1f51241cec80bbf550901a0115e5

**Main Features**:
- Google login support
- Remote conversation and message persistent
- UI improvements
- Multiple tab synchronization on browser

## v0.0.10

**Release Date**: 2025-09-11
**Commit SHA**: b5b6e1dc197378d06ccbf127f60e44779f1e44e5

**Main Features**:
- Chat interface with completion route support
- MCP (Model Context Protocol) integration
- Core web functionality for Jan AI

**Changes**:
- Initial web version release
- Basic chat completion API integration
- MCP server support for tool calling
- Web-optimized UI components

@@ -1,7 +1,7 @@
# Core dependencies
cua-computer[all]~=0.3.5
cua-agent[all]~=0.3.0
cua-agent @ git+https://github.com/janhq/cua.git@compute-agent-0.3.0-patch#subdirectory=libs/python/agent
cua-agent @ git+https://github.com/menloresearch/cua.git@compute-agent-0.3.0-patch#subdirectory=libs/python/agent

# ReportPortal integration
reportportal-client~=5.6.5

@@ -25,8 +25,8 @@ export RANLIB_aarch64_linux_android="$NDK_HOME/toolchains/llvm/prebuilt/darwin-x
# Additional environment variables for Rust cross-compilation
export CARGO_TARGET_AARCH64_LINUX_ANDROID_LINKER="$NDK_HOME/toolchains/llvm/prebuilt/darwin-x86_64/bin/aarch64-linux-android21-clang"

# Only set global CC and AR for Android builds (when IS_ANDROID is set)
if [ "$IS_ANDROID" = "true" ]; then
# Only set global CC and AR for Android builds (when TAURI_ANDROID_BUILD is set)
if [ "$TAURI_ANDROID_BUILD" = "true" ]; then
export CC="$NDK_HOME/toolchains/llvm/prebuilt/darwin-x86_64/bin/aarch64-linux-android21-clang"
export AR="$NDK_HOME/toolchains/llvm/prebuilt/darwin-x86_64/bin/llvm-ar"
echo "Global CC and AR set for Android build"

@@ -13,7 +13,7 @@ import * as core from '@janhq/core'

## Build an Extension

1. Download an extension template, for example, [https://github.com/janhq/extension-template](https://github.com/janhq/extension-template).
1. Download an extension template, for example, [https://github.com/menloresearch/extension-template](https://github.com/menloresearch/extension-template).

2. Update the source code:


@@ -31,7 +31,7 @@
"@vitest/coverage-v8": "^2.1.8",
"@vitest/ui": "^2.1.8",
"eslint": "8.57.0",
"happy-dom": "^20.0.0",
"happy-dom": "^15.11.6",
"pacote": "^21.0.0",
"react": "19.0.0",
"request": "^2.88.2",

@@ -11,8 +11,6 @@ export enum ExtensionTypeEnum {
HuggingFace = 'huggingFace',
Engine = 'engine',
Hardware = 'hardware',
RAG = 'rag',
VectorDB = 'vectorDB',
}

export interface ExtensionType {

@@ -182,7 +182,6 @@ export interface SessionInfo {
port: number // llama-server output port (corrected from portid)
model_id: string //name of the model
model_path: string // path of the loaded model
is_embedding: boolean
api_key: string
mmproj_path?: string
}

@@ -23,8 +23,3 @@ export { MCPExtension } from './mcp'
* Base AI Engines.
*/
export * from './engines'

export { RAGExtension, RAG_INTERNAL_SERVER } from './rag'
export type { AttachmentInput, IngestAttachmentsResult } from './rag'
export { VectorDBExtension } from './vector-db'
export type { SearchMode, VectorDBStatus, VectorChunkInput, VectorSearchResult, AttachmentFileInfo, VectorDBFileInput, VectorDBIngestOptions } from './vector-db'

@@ -1,36 +0,0 @@
import { BaseExtension, ExtensionTypeEnum } from '../extension'
import type { MCPTool, MCPToolCallResult } from '../../types'
import type { AttachmentFileInfo } from './vector-db'

export interface AttachmentInput {
path: string
name?: string
type?: string
size?: number
}

export interface IngestAttachmentsResult {
filesProcessed: number
chunksInserted: number
files: AttachmentFileInfo[]
}

export const RAG_INTERNAL_SERVER = 'rag-internal'

/**
* RAG extension base: exposes RAG tools and orchestration API.
*/
export abstract class RAGExtension extends BaseExtension {
type(): ExtensionTypeEnum | undefined {
return ExtensionTypeEnum.RAG
}

abstract getTools(): Promise<MCPTool[]>
/**
* Lightweight list of tool names for quick routing/lookup.
*/
abstract getToolNames(): Promise<string[]>
abstract callTool(toolName: string, args: Record<string, unknown>): Promise<MCPToolCallResult>

abstract ingestAttachments(threadId: string, files: AttachmentInput[]): Promise<IngestAttachmentsResult>
}
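The removed `ingestAttachments` contract returns an `IngestAttachmentsResult` whose `files` entries are `AttachmentFileInfo` records carrying a `chunk_count`. One plausible way to assemble that result from per-file records is sketched below. The diff does not say how the counters relate, so treating `chunksInserted` as the sum of the per-file `chunk_count` values is an assumption, and `summarizeIngest` is a hypothetical helper. The two interfaces are restated locally so the example is self-contained.

```typescript
// Local restatement of the interfaces shown in the removed files.
interface AttachmentFileInfo {
  id: string;
  name?: string;
  path?: string;
  type?: string;
  size?: number;
  chunk_count: number;
}

interface IngestAttachmentsResult {
  filesProcessed: number;
  chunksInserted: number;
  files: AttachmentFileInfo[];
}

// Hypothetical helper: fold per-file records into the aggregate result.
// Assumption: chunksInserted equals the sum of chunk_count over all files.
function summarizeIngest(files: AttachmentFileInfo[]): IngestAttachmentsResult {
  let chunksInserted = 0;
  for (const file of files) {
    chunksInserted += file.chunk_count;
  }
  return { filesProcessed: files.length, chunksInserted: chunksInserted, files: files };
}

// Illustrative records; the ids and names are made up.
const ingestSummary = summarizeIngest([
  { id: "file-1", name: "notes.txt", chunk_count: 3 },
  { id: "file-2", name: "paper.pdf", chunk_count: 5 },
]);
// ingestSummary.filesProcessed === 2, ingestSummary.chunksInserted === 8
```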

@ -1,82 +0,0 @@

|

||||

import { BaseExtension, ExtensionTypeEnum } from '../extension'

|

||||

|

||||

export type SearchMode = 'auto' | 'ann' | 'linear'

|

||||

|

||||

export interface VectorDBStatus {

|

||||

ann_available: boolean

|

||||

}

|

||||

|

||||

export interface VectorChunkInput {

|

||||

text: string

|

||||

embedding: number[]

|

||||

}

|

||||

|

||||

export interface VectorSearchResult {

|

||||

id: string

|

||||

text: string

|

||||

score?: number

|

||||

file_id: string

|

||||

chunk_file_order: number

|

||||

}

|

||||

|

||||

export interface AttachmentFileInfo {

|

||||

id: string

|

||||

name?: string

|

||||

path?: string

|

||||

type?: string

|

||||

size?: number

|

||||

chunk_count: number

|

||||

}

|

||||

|

||||

// High-level input types for file ingestion

|

||||

export interface VectorDBFileInput {

|

||||

path: string

|

||||

name?: string

|

||||

type?: string

|

||||

size?: number

|

||||

}

|

||||

|

||||

export interface VectorDBIngestOptions {

|

||||

chunkSize: number

|

||||

chunkOverlap: number

|

||||

}

|

||||

|

||||

/**

|

||||

* Vector DB extension base: abstraction over local vector storage and search.

|

||||

*/

|

||||

export abstract class VectorDBExtension extends BaseExtension {

|

||||

type(): ExtensionTypeEnum | undefined {

|

||||

return ExtensionTypeEnum.VectorDB

|

||||

}

|

||||

|

||||

abstract getStatus(): Promise<VectorDBStatus>

|

||||

abstract createCollection(threadId: string, dimension: number): Promise<void>

|

||||

abstract insertChunks(

|

||||

threadId: string,

|

||||

fileId: string,

|

||||

chunks: VectorChunkInput[]

|

||||

): Promise<void>

|

||||

abstract ingestFile(

|

||||

threadId: string,

|

||||

file: VectorDBFileInput,

|

||||

opts: VectorDBIngestOptions

|

||||

): Promise<AttachmentFileInfo>

|

||||

abstract searchCollection(

|

||||

threadId: string,

|

||||

query_embedding: number[],

|

||||

limit: number,

|

||||

threshold: number,

|

||||

mode?: SearchMode,

|

||||

fileIds?: string[]

|

||||

): Promise<VectorSearchResult[]>

|

||||

abstract deleteChunks(threadId: string, ids: string[]): Promise<void>

|

||||

abstract deleteFile(threadId: string, fileId: string): Promise<void>

|

||||

abstract deleteCollection(threadId: string): Promise<void>

|

||||

abstract listAttachments(threadId: string, limit?: number): Promise<AttachmentFileInfo[]>

|

||||

abstract getChunks(

|

||||

threadId: string,

|

||||

fileId: string,

|

||||

startOrder: number,

|

||||

endOrder: number

|

||||

): Promise<VectorSearchResult[]>

|

||||

}
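Reviewer note: the removed `VectorDBExtension` contract is easier to reason about with a concrete search in mind. The sketch below is a hypothetical in-memory version of what `searchCollection` might do in `'linear'` mode. It is not the implementation from this repository. It assumes that `threshold` is a minimum cosine similarity and that `score` is that similarity; the diff confirms neither. `StoredChunk`, `cosine`, and `linearSearch` are names invented for illustration.

```typescript
// Hypothetical sketch only: an in-memory linear scan, not Jan's vector DB code.
// Assumes `threshold` means "minimum cosine similarity" (not confirmed by the diff).

interface VectorChunkInput {
  text: string
  embedding: number[]
}

interface VectorSearchResult {
  id: string
  text: string
  score?: number
  file_id: string
  chunk_file_order: number
}

// Illustrative storage record: a chunk plus the identifiers a result needs.
interface StoredChunk extends VectorChunkInput {
  id: string
  file_id: string
  chunk_file_order: number
}

// Cosine similarity; returns 0 if either vector has zero norm.
function cosine(a: number[], b: number[]): number {
  let dot = 0
  let normA = 0
  let normB = 0
  const n = Math.min(a.length, b.length)
  for (let i = 0; i < n; i++) {
    dot += a[i] * b[i]
    normA += a[i] * a[i]
    normB += b[i] * b[i]
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB)
}

// Score every chunk, drop those below the threshold, return the best `limit`.
function linearSearch(
  chunks: StoredChunk[],
  queryEmbedding: number[],
  limit: number,
  threshold: number,
  fileIds?: string[]
): VectorSearchResult[] {
  return chunks
    .filter((c) => !fileIds || fileIds.includes(c.file_id))
    .map((c) => ({
      id: c.id,
      text: c.text,
      score: cosine(c.embedding, queryEmbedding),
      file_id: c.file_id,
      chunk_file_order: c.chunk_file_order,
    }))
    .filter((r) => r.score >= threshold)
    .sort((x, y) => y.score - x.score)
    .slice(0, limit)
}
```

A linear scan costs one similarity computation per stored chunk, which is presumably why the interface also exposes an `'ann'` mode and reports `ann_available` through `getStatus()`.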

|

||||

@ -12,8 +12,6 @@ export type SettingComponentProps = {

|

||||

extensionName?: string

|

||||

requireModelReload?: boolean

|

||||

configType?: ConfigType

|

||||

titleKey?: string

|

||||

descriptionKey?: string

|

||||

}

|

||||

|

||||

export type ConfigType = 'runtime' | 'setting'

|

||||

|

||||

@ -18,7 +18,7 @@ We try to **keep routes consistent** to maintain SEO.

|

||||

|

||||

## How to Contribute

|

||||

|

||||

Refer to the [Contributing Guide](https://github.com/janhq/jan/blob/main/CONTRIBUTING.md) for more comprehensive information on how to contribute to the Jan project.

|

||||

Refer to the [Contributing Guide](https://github.com/menloresearch/jan/blob/main/CONTRIBUTING.md) for more comprehensive information on how to contribute to the Jan project.

|

||||

|

||||

### Pre-requisites and Installation

|

||||

|

||||

|

||||

Binary file not shown (before: 262 KiB).

@ -1581,7 +1581,7 @@

|

||||

},

|

||||

"cover": {

|

||||

"type": "string",

|

||||

"example": "https://raw.githubusercontent.com/janhq/jan/main/models/trinity-v1.2-7b/cover.png"

|

||||

"example": "https://raw.githubusercontent.com/menloresearch/jan/main/models/trinity-v1.2-7b/cover.png"

|

||||

},

|

||||

"engine": {

|

||||

"type": "string",

|

||||

|

||||

@ -27,7 +27,7 @@ export const APIReference = () => {

|

||||

<ApiReferenceReact

|

||||

configuration={{

|

||||

spec: {

|

||||

url: 'https://raw.githubusercontent.com/janhq/docs/main/public/openapi/jan.json',

|

||||

url: 'https://raw.githubusercontent.com/menloresearch/docs/main/public/openapi/jan.json',

|

||||

},

|

||||

theme: 'alternate',

|

||||

hideModels: true,

|

||||

|

||||

@ -57,7 +57,7 @@ const Changelog = () => {

|

||||

<p className="text-base mt-2 leading-relaxed">

|

||||

Latest release updates from the Jan team. Check out our

|

||||

<a

|

||||

href="https://github.com/orgs/janhq/projects/30"

|

||||

href="https://github.com/orgs/menloresearch/projects/30"

|

||||

className="text-blue-600 dark:text-blue-400 cursor-pointer"

|

||||

>

|

||||

Roadmap

|

||||

@ -150,7 +150,7 @@ const Changelog = () => {

|

||||

|

||||

<div className="text-center">

|

||||

<Link

|

||||

href="https://github.com/janhq/jan/releases"

|

||||

href="https://github.com/menloresearch/jan/releases"

|

||||

target="_blank"

|

||||

className="dark:nx-bg-neutral-900 dark:text-white bg-black text-white hover:text-white justify-center dark:border dark:border-neutral-800 flex-shrink-0 px-4 py-3 rounded-xl inline-flex items-center"

|

||||

>

|

||||

|

||||

@ -72,7 +72,7 @@ export default function CardDownload({ lastRelease }: Props) {

|

||||

|

||||

return {

|

||||

...system,

|

||||

href: `https://github.com/janhq/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

|

||||

href: `https://github.com/menloresearch/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

|

||||

size: asset ? formatFileSize(asset.size) : undefined,

|

||||

}

|

||||

})

|

||||

|

||||

@ -139,7 +139,7 @@ const DropdownDownload = ({ lastRelease }: Props) => {

|

||||

|

||||

return {

|

||||

...system,

|

||||

href: `https://github.com/janhq/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

|

||||

href: `https://github.com/menloresearch/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

|

||||

size: asset ? formatFileSize(asset.size) : undefined,

|

||||

}

|

||||

})

|

||||

|

||||

@ -23,7 +23,7 @@ const BuiltWithLove = () => {

|

||||

</div>

|

||||

<div className="flex flex-col lg:flex-row gap-8 mt-8 items-center justify-center">

|

||||

<a

|

||||

href="https://github.com/janhq/jan"

|

||||

href="https://github.com/menloresearch/jan"

|

||||

target="_blank"

|

||||

className="dark:bg-white bg-black inline-flex w-56 px-4 py-3 rounded-xl cursor-pointer justify-center items-start space-x-4 "

|

||||

>

|

||||

|

||||

@ -44,7 +44,7 @@ const Hero = () => {

|

||||

<div className="mt-10 text-center">

|

||||

<div>

|

||||

<Link

|

||||

href="https://github.com/janhq/jan/releases"

|

||||

href="https://github.com/menloresearch/jan/releases"

|

||||

target="_blank"

|

||||

className="hidden lg:inline-block"

|

||||

>

|

||||

|

||||

@ -95,7 +95,7 @@ const Home = () => {

|

||||

<div className="container mx-auto relative z-10">

|

||||

<div className="flex justify-center items-center mt-14 lg:mt-20 px-4">

|

||||

<a

|

||||

href={`https://github.com/janhq/jan/releases/tag/${lastVersion}`}

|

||||

href={`https://github.com/menloresearch/jan/releases/tag/${lastVersion}`}

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

className="bg-black/40 px-3 lg:px-4 rounded-full h-10 inline-flex items-center max-w-full animate-fade-in delay-100"

|

||||

@ -270,7 +270,7 @@ const Home = () => {

|

||||

data-delay="600"

|

||||

>

|

||||

<a

|

||||

href="https://github.com/janhq/jan"

|

||||

href="https://github.com/menloresearch/jan"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

>

|

||||

@ -387,7 +387,7 @@ const Home = () => {

|

||||

</div>

|

||||

<a

|

||||

className="hidden md:block"

|

||||

href="https://github.com/janhq/jan"

|

||||

href="https://github.com/menloresearch/jan"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

>

|

||||

@ -413,7 +413,7 @@ const Home = () => {

|

||||

</p>

|

||||

<a

|

||||

className="md:hidden mt-4 block w-full"

|

||||

href="https://github.com/janhq/jan"

|

||||

href="https://github.com/menloresearch/jan"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

>

|

||||

|

||||

@ -95,7 +95,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

|

||||

})}

|

||||

<li>

|

||||

<a

|

||||

href="https://github.com/janhq/jan/releases/latest"

|

||||

href="https://github.com/menloresearch/jan/releases/latest"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

>

|

||||

@ -141,7 +141,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

|

||||

<FaLinkedinIn className="size-5" />

|

||||

</a>

|

||||

<a

|

||||

href="https://github.com/janhq/jan"

|

||||

href="https://github.com/menloresearch/jan"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

className="rounded-lg flex items-center justify-center"

|

||||

@ -156,7 +156,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

|

||||

{/* Mobile Download Button and Hamburger */}

|

||||

<div className="lg:hidden flex items-center gap-3">

|

||||

<a

|

||||

href="https://github.com/janhq/jan/releases/latest"

|

||||

href="https://github.com/menloresearch/jan/releases/latest"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

>

|

||||

@ -278,7 +278,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

|

||||

<FaLinkedinIn className="size-5" />

|

||||

</a>

|

||||

<a

|

||||

href="https://github.com/janhq/jan"

|

||||

href="https://github.com/menloresearch/jan"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

className="text-black rounded-lg flex items-center justify-center"

|

||||

@ -296,7 +296,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

|

||||

asChild

|

||||

>

|

||||

<a

|

||||

href="https://github.com/janhq/jan/releases/latest"

|

||||

href="https://github.com/menloresearch/jan/releases/latest"

|

||||

target="_blank"

|

||||

rel="noopener noreferrer"

|

||||

>

|

||||

|

||||

@ -120,7 +120,7 @@ export function DropdownButton({

|

||||

|

||||

return {

|

||||

...option,

|

||||

href: `https://github.com/janhq/jan/releases/download/${lastRelease.tag_name}/${fileName}`,

|

||||

href: `https://github.com/menloresearch/jan/releases/download/${lastRelease.tag_name}/${fileName}`,

|

||||

size: asset ? formatFileSize(asset.size) : 'N/A',

|

||||

}

|

||||

})
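Reviewer note: the same release download URL template is repeated in `CardDownload`, `DropdownDownload`, and `DropdownButton`, so every organization rename touches each copy. A minimal sketch of a shared helper follows. `releaseAssetUrl` and its parameters are hypothetical and are not part of this diff. Unlike the template strings above, it also percent-encodes the tag and file name, which is a deliberate deviation.

```typescript
// Hypothetical helper, not part of the diff: builds a GitHub release asset URL
// from an "owner/name" repository string, a tag, and an asset file name.
function releaseAssetUrl(repo: string, tagName: string, fileName: string): string {
  // Encode the variable segments so unusual characters cannot break the path.
  const tag = encodeURIComponent(tagName)
  const file = encodeURIComponent(fileName)
  return `https://github.com/${repo}/releases/download/${tag}/${file}`
}

// Usage with a made-up asset name:
const exampleHref = releaseAssetUrl('menloresearch/jan', 'v0.6.8', 'jan-example.dmg')
```

With one helper, a rename such as the `janhq` to `menloresearch` change in this comparison would be a one-line edit instead of one edit per component.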

|

||||

|

||||

@ -18,7 +18,7 @@ description: Development setup, workflow, and contribution guidelines for Jan Se

|

||||

|

||||

1. **Clone Repository**

|

||||

```bash

|

||||

git clone https://github.com/janhq/jan-server

|

||||

git clone https://github.com/menloresearch/jan-server

|

||||

cd jan-server

|

||||

```

|

||||

|

||||

|

||||

@ -19,7 +19,7 @@ Jan Server currently supports minikube for local development. Production Kuberne

|

||||

|

||||

1. **Clone the repository**

|

||||

```bash

|

||||

git clone https://github.com/janhq/jan-server

|

||||

git clone https://github.com/menloresearch/jan-server

|

||||

cd jan-server

|

||||

```

|

||||

|

||||

|

||||

@ -24,4 +24,4 @@ Fixes 💫

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.5).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.5).

|

||||

@ -24,4 +24,4 @@ Jan now supports Mistral's new model Codestral. Thanks [Bartowski](https://huggi

|

||||

|

||||

More GGUF models can run in Jan - we rebased to llama.cpp b3012. Big thanks to [ggerganov](https://github.com/ggerganov)

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.0).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.0).

|

||||

|

||||

@ -28,4 +28,4 @@ Jan now understands LaTeX, allowing users to process and understand complex math

|

||||

|

||||

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.4.12).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.4.12).

|

||||

|

||||

@ -28,4 +28,4 @@ Users can now connect to OpenAI's new model GPT-4o.

|

||||

|

||||

|

||||

|

||||

For more details, see the [GitHub release notes.](https://github.com/janhq/jan/releases/tag/v0.4.13)

|

||||

For more details, see the [GitHub release notes.](https://github.com/menloresearch/jan/releases/tag/v0.4.13)

|

||||

|

||||

@ -16,4 +16,4 @@ More GGUF models can run in Jan - we rebased to llama.cpp b2961.

|

||||

|

||||

Huge shoutouts to [ggerganov](https://github.com/ggerganov) and contributors for llama.cpp, and [Bartowski](https://huggingface.co/bartowski) for GGUF models.

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.4.14).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.4.14).

|

||||

|

||||

@ -26,4 +26,4 @@ We've updated to llama.cpp b3088 for better performance - thanks to [GG](https:/

|

||||

- Reduced chat font weight (back to normal!)

|

||||

- Restored the maximize button

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.1).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.1).

|

||||

|

||||

@ -32,4 +32,4 @@ We've restored the tooltip hover functionality, which makes it easier to access

|

||||

|

||||

The right-click options for thread settings are now fully operational again. You can now manage your threads with this fix.

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.2).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.2).

|

||||

|

||||

@ -23,4 +23,4 @@ We've been working on stability issues over the last few weeks. Jan is now more

|

||||

- Fixed the GPU memory utilization bar

|

||||

- Some UX and copy improvements

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.3).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.3).

|

||||

|

||||

@ -32,4 +32,4 @@ Switching between threads used to reset your instruction settings. That’s fixe

|

||||

### Minor UI Tweaks & Bug Fixes

|

||||

We’ve also resolved issues with the input slider on the right panel and tackled several smaller bugs to keep everything running smoothly.

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.4).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.4).

|

||||

|

||||

@ -23,4 +23,4 @@ Fixes 💫

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.7).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.7).

|

||||

@ -22,4 +22,4 @@ Jan v0.5.9 is here: fixing what needed fixing

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.9).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.9).

|

||||

@ -22,4 +22,4 @@ and various UI/UX enhancements 💫

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.8).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.8).

|

||||

@ -19,4 +19,4 @@ Jan v0.5.10 is live: Jan is faster, smoother, and more reliable.

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.10).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.10).

|

||||

@ -23,4 +23,4 @@ Jan v0.5.11 is here - critical issues fixed, Mac installation updated.

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.11).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.11).

|

||||

@ -25,4 +25,4 @@ Jan v0.5.11 is here - critical issues fixed, Mac installation updated.

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.12).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.12).

|

||||

@ -20,4 +20,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your product or download the latest: https://jan.ai

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.13).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.13).

|

||||

|

||||

@ -33,4 +33,4 @@ Llama

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.14).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.14).

|

||||

|

||||

@ -25,4 +25,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.15).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.15).

|

||||

|

||||

@ -26,4 +26,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.16).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.16).

|

||||

|

||||

@ -20,4 +20,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.17).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.17).

|

||||

|

||||

@ -18,4 +18,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.1).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.1).

|

||||

@ -18,4 +18,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.3).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.3).

|

||||

@ -23,4 +23,4 @@ new MCP examples.

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.5).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.5).

|

||||

@ -116,4 +116,4 @@ integrations. Stay tuned!

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.6).

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.6).

|

||||

|

||||

@ -89,4 +89,4 @@ We're continuing to optimize performance for large models, expand MCP integratio

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.7).

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.7).

|

||||

|

||||

@ -74,4 +74,4 @@ v0.6.8 focuses on stability and real workflows: major llama.cpp hardening, two n

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.8).

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.8).

|

||||

|

||||

@ -1,28 +0,0 @@

|

||||

---

|

||||

title: "Jan v0.7.0: Jan Projects"

|

||||

version: 0.7.0

|

||||

description: "Jan v0.7.0 introduces Projects, model renaming, llama.cpp auto-tuning, model stats, and Azure support."

|

||||

date: 2025-10-02

|

||||

ogImage: "/assets/images/changelog/jan-release-v0.7.0.jpeg"

|

||||

---

|

||||

|

||||

import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

import { Callout } from 'nextra/components'

|

||||

|

||||

<ChangelogHeader title="Jan v0.7.0" date="2025-10-01" ogImage="/assets/images/changelog/jan-release-v0.7.0.jpeg" />

|

||||

|

||||

## Jan v0.7.0: Jan Projects

|

||||

|

||||

Jan v0.7.0 is live! This release focuses on helping you organize your workspace and better understand how models run.

|

||||

|

||||

### What’s new

|

||||

- **Projects**: Group related chats under one project for a cleaner workflow.

|

||||

- **Rename models**: Give your models custom names for easier identification.

|

||||

- **Model context stats**: See context usage when a model runs.

|

||||

- **Auto-loaded cloud models**: Cloud model names now appear automatically.

|

||||

|

||||

---

|

||||

|

||||

Update your Jan or [download the latest version](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.7.0).

|

||||

@ -1,26 +0,0 @@

|

||||

---

|

||||

title: "Jan v0.7.1: Fixes Windows Version Revert & OpenRouter Models"

|

||||

version: 0.7.1

|

||||

description: "Jan v0.7.1 focuses on bug fixes, including a windows version revert and improvements to OpenRouter models."

|

||||

date: 2025-10-03

|

||||

---

|

||||

|

||||

import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

import { Callout } from 'nextra/components'

|

||||

|

||||

<ChangelogHeader title="Jan v0.7.1" date="2025-10-03" />

|

||||

|

||||

### Bug Fixes: Windows Version Revert & OpenRouter Models

|

||||

|

||||

#### Two quick fixes:

|

||||

- Jan no longer reverts to an older version on load

|

||||

- OpenRouter can now add models again

|

||||

- Add headers for anthropic request to fetch models

|

||||

|

||||

---

|

||||

|

||||

Update your Jan or [download the latest version](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.7.1).

|

||||

|

||||

|

||||

@ -1,25 +0,0 @@

|

||||

---

|

||||

title: "Jan v0.7.2: Security Update"

|

||||

version: 0.7.2

|

||||

description: "Jan v0.7.2 updates the happy-dom dependency to v20.0.0 to address a recently disclosed sandbox vulnerability."

|

||||

date: 2025-10-16

|

||||

---

|

||||

|

||||

import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

import { Callout } from 'nextra/components'

|

||||

|

||||

<ChangelogHeader title="Jan v0.7.2" date="2025-10-16" />

|

||||

|

||||

## Jan v0.7.2: Security Update (happy-dom v20)

|

||||

|

||||

This release focuses on **security and stability improvements**.

|

||||

It updates the `happy-dom` dependency to the latest version to address a recently disclosed vulnerability.

|

||||

|

||||

### Security Fix

|

||||

- Updated `happy-dom` to **^20.0.0**, preventing untrusted JavaScript executed within HAPPY DOM from accessing process-level functions and executing arbitrary code outside the intended sandbox.

|

||||

|

||||

---

|

||||

|

||||

Update your Jan or [download the latest version](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.7.2).

|

||||

@ -41,7 +41,7 @@ Jan is an open-source replacement for ChatGPT:

|

||||

|

||||

Jan is a full [product suite](https://en.wikipedia.org/wiki/Software_suite) that offers an alternative to Big AI:

|

||||

- [Jan Desktop](/docs/desktop/quickstart): macOS, Windows, and Linux apps with offline mode

|

||||

- [Jan Web](https://chat.menlo.ai): Jan on browser, a direct alternative to chatgpt.com

|

||||

- [Jan Web](https://chat.jan.ai): Jan on browser, a direct alternative to chatgpt.com

|

||||

- Jan Mobile: iOS and Android apps (Coming Soon)

|

||||

- [Jan Server](/docs/server): deploy locally, in your cloud, or on-prem

|

||||

- [Jan Models](/docs/models): Open-source models optimized for deep research, tool use, and reasoning

|

||||

|

||||

@ -135,5 +135,5 @@ Min-p: 0.0

|

||||

|

||||

## 🤝 Community & Support

|

||||

- **Discussions**: [HuggingFace Community](https://huggingface.co/Menlo/Jan-nano-128k/discussions)

|

||||

- **Issues**: [GitHub Repository](https://github.com/janhq/deep-research/issues)

|

||||

- **Issues**: [GitHub Repository](https://github.com/menloresearch/deep-research/issues)

|

||||

- **Discord**: Join our research community for tips and best practices

|

||||

|

||||

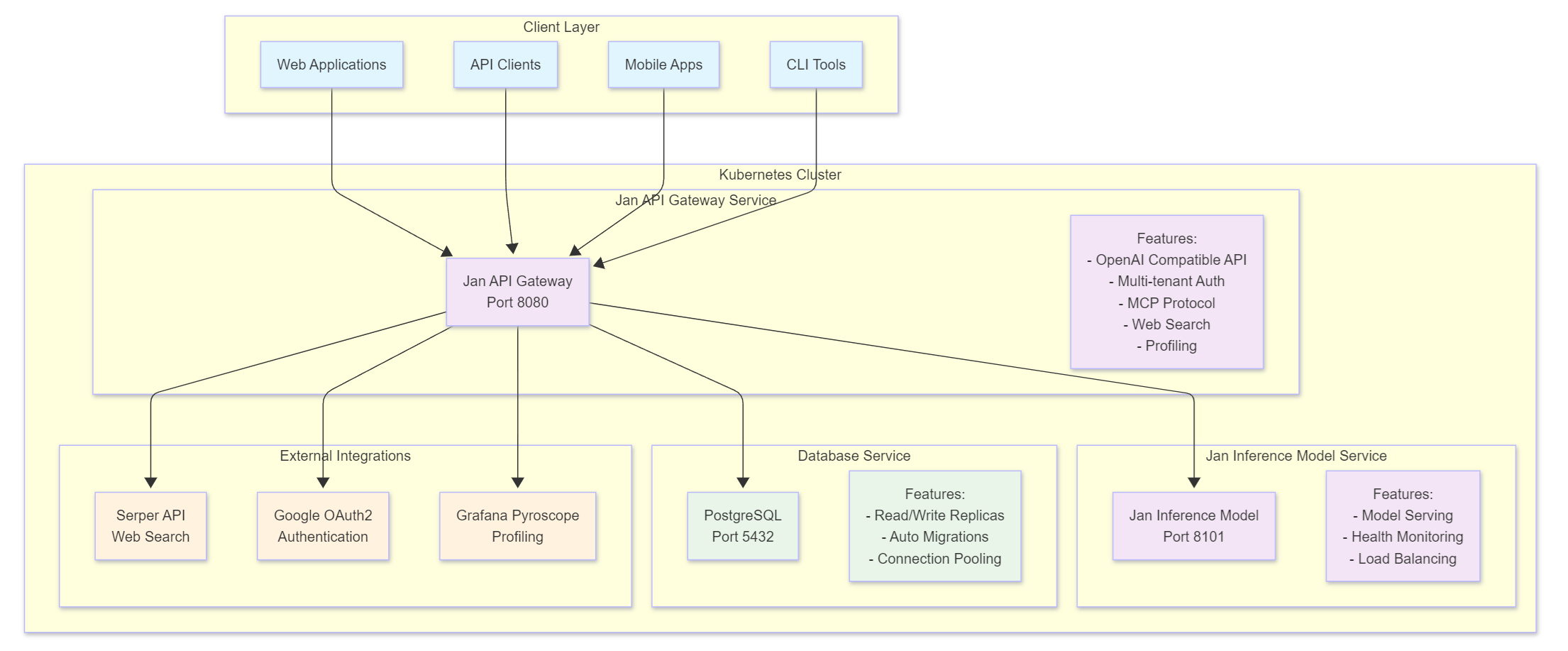

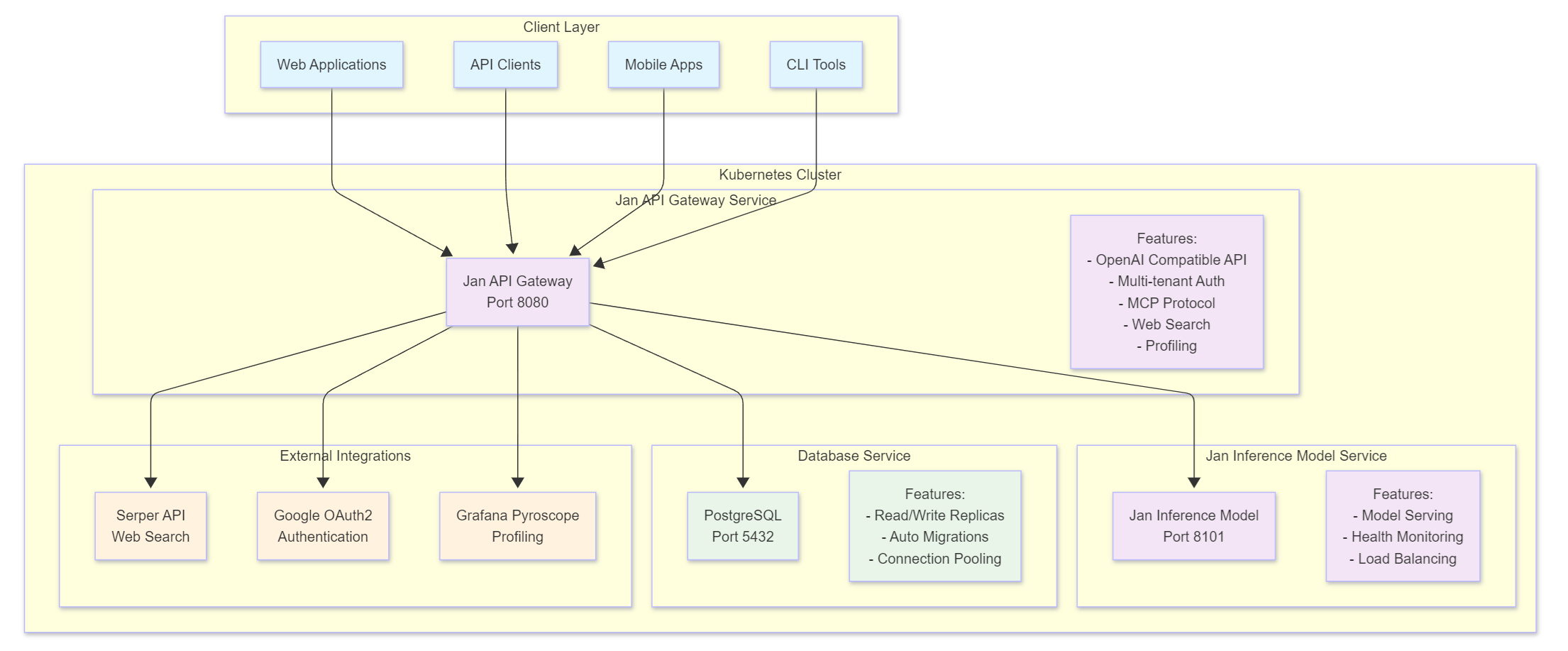

@ -9,7 +9,7 @@ Jan Server is a comprehensive self-hosted AI server platform that provides OpenA

|

||||

|

||||

Jan Server is a Kubernetes-native platform consisting of multiple microservices that work together to provide a complete AI infrastructure solution. It offers:

|

||||

|

||||

|

||||

|

||||

|

||||

### Key Features

|

||||

- **OpenAI-Compatible API**: Full compatibility with OpenAI's chat completion API

|

||||

|

||||

@ -3,7 +3,7 @@ title: Development

|

||||

description: Development setup, workflow, and contribution guidelines for Jan Server.

|

||||

---

|

||||

## Core Domain Models

|

||||

|

||||

|

||||

## Development Setup

|

||||

|

||||

### Prerequisites

|

||||

@ -42,7 +42,7 @@ description: Development setup, workflow, and contribution guidelines for Jan Se

|

||||

|

||||

1. **Clone Repository**

|

||||

```bash

|

||||

git clone https://github.com/janhq/jan-server

|

||||

git clone https://github.com/menloresearch/jan-server

|

||||

cd jan-server

|

||||

```

|

||||

|

||||

|

||||

@ -40,7 +40,7 @@ Jan Server is a Kubernetes-native platform consisting of multiple microservices

|

||||

- **Monitoring & Profiling**: Built-in performance monitoring and health checks

|

||||

|

||||

## System Architecture

|

||||

|

||||

|

||||

## Services

|

||||

|

||||

### Jan API Gateway

|

||||

|

||||

@ -19,7 +19,7 @@ keywords:

|

||||

import Download from "@/components/Download"

|

||||

|

||||