Compare commits

33 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 083553e1e2 | |
| | 39917920bd | |
| | cde8e54fdd | |
| | 5b6feb7973 | |
| | 370527bb50 | |
| | 22645549ce | |
| | 289dc2b6d3 | |
| | 475eede903 | |
| | 9a8aa07094 | |
| | efccec0bd7 | |
| | 47fcdfd90f | |
| | cdfcbd0a2b | |
| | b238fbcd41 | |
| | efdd1b3971 | |
| | b3c3cc8f26 | |
| | 3668bfb14f | |
| | bc8ff74e98 | |
| | 2367c156e2 | |
| | 494db746f7 | |
| | 94bfad8d27 | |
| | 685054c5bc | |
| | 7413f1354f | |
| | 14768a6ed6 | |
| | 664f304631 | |
| | a8df33c0dc | |
| | 5a481b5022 | |
| | 2b6f581f9a | |
| | e88b8baf19 | |
| | 8894d72e6b | |
| | b36fb2dd73 | |
| | 9a0c16a126 | |
| | 4ef21545a4 | |
| | 4368eb2893 | |

2

.github/ISSUE_TEMPLATE/config.yml

vendored


@@ -1,5 +1,5 @@

blank_issues_enabled: true

contact_links:

- name: Jan Discussions

url: https://github.com/orgs/janhq/discussions/categories/q-a

url: https://github.com/orgs/menloresearch/discussions/categories/q-a

about: Get help, discuss features & roadmap, and share your projects

108

.github/workflows/jan-tauri-build-nightly.yaml

vendored


@@ -168,62 +168,62 @@ jobs:

AWS_DEFAULT_REGION: ${{ secrets.DELTA_AWS_REGION }}

AWS_EC2_METADATA_DISABLED: 'true'

# noti-discord-nightly-and-update-url-readme:

# needs:

# [

# build-macos,

# build-windows-x64,

# build-linux-x64,

# get-update-version,

# set-public-provider,

# sync-temp-to-latest,

# ]

# secrets: inherit

# if: github.event_name == 'schedule'

# uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml

# with:

# ref: refs/heads/dev

# build_reason: Nightly

# push_to_branch: dev

# new_version: ${{ needs.get-update-version.outputs.new_version }}

noti-discord-nightly-and-update-url-readme:

needs:

[

build-macos,

build-windows-x64,

build-linux-x64,

get-update-version,

set-public-provider,

sync-temp-to-latest,

]

secrets: inherit

if: github.event_name == 'schedule'

uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml

with:

ref: refs/heads/dev

build_reason: Nightly

push_to_branch: dev

new_version: ${{ needs.get-update-version.outputs.new_version }}

# noti-discord-pre-release-and-update-url-readme:

# needs:

# [

# build-macos,

# build-windows-x64,

# build-linux-x64,

# get-update-version,

# set-public-provider,

# sync-temp-to-latest,

# ]

# secrets: inherit

# if: github.event_name == 'push'

# uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml

# with:

# ref: refs/heads/dev

# build_reason: Pre-release

# push_to_branch: dev

# new_version: ${{ needs.get-update-version.outputs.new_version }}

noti-discord-pre-release-and-update-url-readme:

needs:

[

build-macos,

build-windows-x64,

build-linux-x64,

get-update-version,

set-public-provider,

sync-temp-to-latest,

]

secrets: inherit

if: github.event_name == 'push'

uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml

with:

ref: refs/heads/dev

build_reason: Pre-release

push_to_branch: dev

new_version: ${{ needs.get-update-version.outputs.new_version }}

# noti-discord-manual-and-update-url-readme:

# needs:

# [

# build-macos,

# build-windows-x64,

# build-linux-x64,

# get-update-version,

# set-public-provider,

# sync-temp-to-latest,

# ]

# secrets: inherit

# if: github.event_name == 'workflow_dispatch' && github.event.inputs.public_provider == 'aws-s3'

# uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml

# with:

# ref: refs/heads/dev

# build_reason: Manual

# push_to_branch: dev

# new_version: ${{ needs.get-update-version.outputs.new_version }}

noti-discord-manual-and-update-url-readme:

needs:

[

build-macos,

build-windows-x64,

build-linux-x64,

get-update-version,

set-public-provider,

sync-temp-to-latest,

]

secrets: inherit

if: github.event_name == 'workflow_dispatch' && github.event.inputs.public_provider == 'aws-s3'

uses: ./.github/workflows/template-noti-discord-and-update-url-readme.yml

with:

ref: refs/heads/dev

build_reason: Manual

push_to_branch: dev

new_version: ${{ needs.get-update-version.outputs.new_version }}

comment-pr-build-url:

needs:

6

.github/workflows/jan-tauri-build.yaml

vendored


@@ -82,11 +82,11 @@ jobs:

VERSION=${{ needs.get-update-version.outputs.new_version }}

PUB_DATE=$(date -u +"%Y-%m-%dT%H:%M:%S.%3NZ")

LINUX_SIGNATURE="${{ needs.build-linux-x64.outputs.APPIMAGE_SIG }}"

LINUX_URL="https://github.com/janhq/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-linux-x64.outputs.APPIMAGE_FILE_NAME }}"

LINUX_URL="https://github.com/menloresearch/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-linux-x64.outputs.APPIMAGE_FILE_NAME }}"

WINDOWS_SIGNATURE="${{ needs.build-windows-x64.outputs.WIN_SIG }}"

WINDOWS_URL="https://github.com/janhq/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-windows-x64.outputs.FILE_NAME }}"

WINDOWS_URL="https://github.com/menloresearch/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-windows-x64.outputs.FILE_NAME }}"

DARWIN_SIGNATURE="${{ needs.build-macos.outputs.MAC_UNIVERSAL_SIG }}"

DARWIN_URL="https://github.com/janhq/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-macos.outputs.TAR_NAME }}"

DARWIN_URL="https://github.com/menloresearch/jan/releases/download/v${{ needs.get-update-version.outputs.new_version }}/${{ needs.build-macos.outputs.TAR_NAME }}"

jq --arg version "$VERSION" \

--arg pub_date "$PUB_DATE" \
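For orientation: the variables set in this hunk feed a `jq` call that assembles an updater manifest, but the `jq` filter itself is cut off in the diff. The following is a minimal TypeScript sketch of that kind of assembly, assuming a manifest with `version`, `pub_date`, and per-platform `signature`/`url` entries. The names `buildManifest`, `ManifestAsset`, and `UpdateManifest`, and the platform key in the usage note, are hypothetical and not taken from the workflow.

```typescript
// Hypothetical shapes: the real manifest layout is not visible in the diff.
interface ManifestAsset {
  signature: string;
  fileName: string;
}

interface UpdateManifest {
  version: string;
  pub_date: string;
  platforms: Record<string, { signature: string; url: string }>;
}

// Assemble the manifest from a version, a publication date, and one asset per platform.
// The URL pattern mirrors the LINUX_URL / WINDOWS_URL / DARWIN_URL lines in the hunk.
function buildManifest(
  repoSlug: string,
  version: string,
  pubDate: string,
  assets: Record<string, ManifestAsset>,
): UpdateManifest {
  const platforms: Record<string, { signature: string; url: string }> = {};
  for (const platform of Object.keys(assets)) {
    const asset = assets[platform];
    platforms[platform] = {
      signature: asset.signature,
      url: `https://github.com/${repoSlug}/releases/download/v${version}/${asset.fileName}`,
    };
  }
  return { version, pub_date: pubDate, platforms };
}
```

A call such as `buildManifest("menloresearch/jan", version, pubDate, { "linux-x86_64": { signature, fileName } })` would then be serialized with `JSON.stringify`. Passing the repository slug as a parameter keeps an organization rename to a single argument.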

@@ -29,7 +29,7 @@ jobs:

local max_retries=3

local tag

while [ $retries -lt $max_retries ]; do

tag=$(curl -s https://api.github.com/repos/janhq/jan/releases/latest | jq -r .tag_name)

tag=$(curl -s https://api.github.com/repos/menloresearch/jan/releases/latest | jq -r .tag_name)

if [ -n "$tag" ] && [ "$tag" != "null" ]; then

echo $tag

return
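The loop in this hunk retries the "latest release" lookup up to `max_retries` times and accepts the result only when it is non-empty and not the literal string `null`. What happens between attempts is outside the visible context, so the sketch below is an assumption-laden illustration of the same bounded-retry check in TypeScript. The function name `getLatestTag` and the injected `fetchTag` callback (standing in for the `curl | jq` pipeline) are hypothetical, and the sketch is synchronous with no delay between attempts for simplicity.

```typescript
// Hypothetical sketch: `fetchTag` stands in for the curl + jq call and is injected
// so the loop can be exercised without network access.
function getLatestTag(
  fetchTag: () => string | null,
  maxRetries: number = 3,
): string | null {
  let retries = 0;
  while (retries < maxRetries) {
    const tag = fetchTag();
    // Mirror the shell check: non-empty and not the literal string "null".
    if (tag && tag !== "null") {
      return tag;
    }
    retries += 1;
  }
  // All attempts failed; the caller decides how to handle the missing tag.
  return null;
}
```

A production version would likely be asynchronous and wait between attempts; only the retry bound and the validity check are taken from the hunk.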

@@ -50,6 +50,6 @@ jobs:

- macOS Universal: https://delta.jan.ai/nightly/Jan-nightly_{{ VERSION }}_universal.dmg

- Linux Deb: https://delta.jan.ai/nightly/Jan-nightly_{{ VERSION }}_amd64.deb

- Linux AppImage: https://delta.jan.ai/nightly/Jan-nightly_{{ VERSION }}_amd64.AppImage

- Github action run: https://github.com/janhq/jan/actions/runs/{{ GITHUB_RUN_ID }}

- Github action run: https://github.com/menloresearch/jan/actions/runs/{{ GITHUB_RUN_ID }}

env:

DISCORD_WEBHOOK: ${{ secrets.DISCORD_WEBHOOK }}

@@ -143,7 +143,7 @@ jan/

**Option 1: The Easy Way (Make)**

```bash

git clone https://github.com/janhq/jan

git clone https://github.com/menloresearch/jan

cd jan

make dev

```

@@ -152,8 +152,8 @@ make dev

### Reporting Bugs

- **Ensure the bug was not already reported** by searching on GitHub under [Issues](https://github.com/janhq/jan/issues)

- If you're unable to find an open issue addressing the problem, [open a new one](https://github.com/janhq/jan/issues/new)

- **Ensure the bug was not already reported** by searching on GitHub under [Issues](https://github.com/menloresearch/jan/issues)

- If you're unable to find an open issue addressing the problem, [open a new one](https://github.com/menloresearch/jan/issues/new)

- Include your system specs and error logs - it helps a ton

### Suggesting Enhancements

@@ -28,7 +28,6 @@ COPY ./Makefile ./Makefile

COPY ./.* /

COPY ./package.json ./package.json

COPY ./yarn.lock ./yarn.lock

COPY ./pre-install ./pre-install

COPY ./core ./core

# Build web application

16

README.md


@@ -4,10 +4,10 @@

<p align="center">

<!-- ALL-CONTRIBUTORS-BADGE:START - Do not remove or modify this section -->

<img alt="GitHub commit activity" src="https://img.shields.io/github/commit-activity/m/janhq/jan"/>

<img alt="Github Last Commit" src="https://img.shields.io/github/last-commit/janhq/jan"/>

<img alt="Github Contributors" src="https://img.shields.io/github/contributors/janhq/jan"/>

<img alt="GitHub closed issues" src="https://img.shields.io/github/issues-closed/janhq/jan"/>

<img alt="GitHub commit activity" src="https://img.shields.io/github/commit-activity/m/menloresearch/jan"/>

<img alt="Github Last Commit" src="https://img.shields.io/github/last-commit/menloresearch/jan"/>

<img alt="Github Contributors" src="https://img.shields.io/github/contributors/menloresearch/jan"/>

<img alt="GitHub closed issues" src="https://img.shields.io/github/issues-closed/menloresearch/jan"/>

<img alt="Discord" src="https://img.shields.io/discord/1107178041848909847?label=discord"/>

</p>

@@ -15,7 +15,7 @@

<a href="https://www.jan.ai/docs/desktop">Getting Started</a>

- <a href="https://discord.gg/Exe46xPMbK">Community</a>

- <a href="https://jan.ai/changelog">Changelog</a>

- <a href="https://github.com/janhq/jan/issues">Bug reports</a>

- <a href="https://github.com/menloresearch/jan/issues">Bug reports</a>

</p>

Jan is bringing the best of open-source AI in an easy-to-use product. Download and run LLMs with **full control** and **privacy**.

@@ -48,7 +48,7 @@ The easiest way to get started is by downloading one of the following versions f

</table>

Download from [jan.ai](https://jan.ai/) or [GitHub Releases](https://github.com/janhq/jan/releases).

Download from [jan.ai](https://jan.ai/) or [GitHub Releases](https://github.com/menloresearch/jan/releases).

## Features

@@ -73,7 +73,7 @@ For those who enjoy the scenic route:

### Run with Make

```bash

git clone https://github.com/janhq/jan

git clone https://github.com/menloresearch/jan

cd jan

make dev

```

@@ -128,7 +128,7 @@ Contributions welcome. See [CONTRIBUTING.md](CONTRIBUTING.md) for the full spiel

## Contact

- **Bugs**: [GitHub Issues](https://github.com/janhq/jan/issues)

- **Bugs**: [GitHub Issues](https://github.com/menloresearch/jan/issues)

- **Business**: hello@jan.ai

- **Jobs**: hr@jan.ai

- **General Discussion**: [Discord](https://discord.gg/FTk2MvZwJH)

50

WEB_VERSION_TRACKER.md

Normal file


@@ -0,0 +1,50 @@

# Jan Web Version Tracker

Internal tracker for web component changes and features.

## v0.0.13 (current)

**Release Date**: 2025-10-24

**Commit SHA**: 22645549cea48b1ae24b5b9dc70411fd3bfc9935

**Main Features**:

- Migrate auth to platform menlo

- Remove conv prefix

- Disable Project for web

- Model capabilites are fetched correctly from model catalog

## v0.0.12

**Release Date**: 2025-10-02

**Commit SHA**: df145d63a93bd27336b5b539ce0719fe9c7719e3

**Main Features**:

- Search button instead of tools

- Projects support properly for local used

- Temporary chat mode

- Performance enhancement: prevent thread items over fetching on app start

- Fix Google Tag

## v0.0.11

**Release Date**: 2025-09-23

**Commit SHA**: 494db746f7dd1f51241cec80bbf550901a0115e5

**Main Features**:

- Google login support

- Remote conversation and message persistent

- UI improvements

- Multiple tab synchronization on browser

## v0.0.10

**Release Date**: 2025-09-11

**Commit SHA**: b5b6e1dc197378d06ccbf127f60e44779f1e44e5

**Main Features**:

- Chat interface with completion route support

- MCP (Model Context Protocol) integration

- Core web functionality for Jan AI

**Changes**:

- Initial web version release

- Basic chat completion API integration

- MCP server support for tool calling

- Web-optimized UI components

@@ -1,7 +1,7 @@

# Core dependencies

cua-computer[all]~=0.3.5

cua-agent[all]~=0.3.0

cua-agent @ git+https://github.com/janhq/cua.git@compute-agent-0.3.0-patch#subdirectory=libs/python/agent

cua-agent @ git+https://github.com/menloresearch/cua.git@compute-agent-0.3.0-patch#subdirectory=libs/python/agent

# ReportPortal integration

reportportal-client~=5.6.5

@@ -13,7 +13,7 @@ import * as core from '@janhq/core'

## Build an Extension

1. Download an extension template, for example, [https://github.com/janhq/extension-template](https://github.com/janhq/extension-template).

1. Download an extension template, for example, [https://github.com/menloresearch/extension-template](https://github.com/menloresearch/extension-template).

2. Update the source code:

@@ -18,7 +18,7 @@ We try to **keep routes consistent** to maintain SEO.

## How to Contribute

Refer to the [Contributing Guide](https://github.com/janhq/jan/blob/main/CONTRIBUTING.md) for more comprehensive information on how to contribute to the Jan project.

Refer to the [Contributing Guide](https://github.com/menloresearch/jan/blob/main/CONTRIBUTING.md) for more comprehensive information on how to contribute to the Jan project.

### Pre-requisites and Installation

@@ -1581,7 +1581,7 @@

},

"cover": {

"type": "string",

"example": "https://raw.githubusercontent.com/janhq/jan/main/models/trinity-v1.2-7b/cover.png"

"example": "https://raw.githubusercontent.com/menloresearch/jan/main/models/trinity-v1.2-7b/cover.png"

},

"engine": {

"type": "string",

@@ -27,7 +27,7 @@ export const APIReference = () => {

<ApiReferenceReact

configuration={{

spec: {

url: 'https://raw.githubusercontent.com/janhq/docs/main/public/openapi/jan.json',

url: 'https://raw.githubusercontent.com/menloresearch/docs/main/public/openapi/jan.json',

},

theme: 'alternate',

hideModels: true,

@@ -57,7 +57,7 @@ const Changelog = () => {

<p className="text-base mt-2 leading-relaxed">

Latest release updates from the Jan team. Check out our

<a

href="https://github.com/orgs/janhq/projects/30"

href="https://github.com/orgs/menloresearch/projects/30"

className="text-blue-600 dark:text-blue-400 cursor-pointer"

>

Roadmap

@@ -150,7 +150,7 @@ const Changelog = () => {

<div className="text-center">

<Link

href="https://github.com/janhq/jan/releases"

href="https://github.com/menloresearch/jan/releases"

target="_blank"

className="dark:nx-bg-neutral-900 dark:text-white bg-black text-white hover:text-white justify-center dark:border dark:border-neutral-800 flex-shrink-0 px-4 py-3 rounded-xl inline-flex items-center"

>

@@ -72,7 +72,7 @@ export default function CardDownload({ lastRelease }: Props) {

return {

...system,

href: `https://github.com/janhq/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

href: `https://github.com/menloresearch/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

size: asset ? formatFileSize(asset.size) : undefined,

}

})
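The same release-download template string is edited in several components in this diff (`CardDownload`, `DropdownDownload`, `DropdownButton`). As a possible way to keep that URL in one place, here is a minimal sketch of a shared helper. The names `GITHUB_REPO` and `releaseDownloadUrl` are hypothetical and do not appear in the changed files, and the default slug is simply one of the two values shown in the hunk.

```typescript
// Hypothetical constant: a single place to hold the "<org>/<repo>" slug.
const GITHUB_REPO = "menloresearch/jan";

// Build the asset URL in the same shape as the template literals in the hunk:
// https://github.com/<org>/<repo>/releases/download/<tag_name>/<file>
function releaseDownloadUrl(
  tagName: string,
  fileName: string,
  repo: string = GITHUB_REPO,
): string {
  return `https://github.com/${repo}/releases/download/${tagName}/${fileName}`;
}
```

Each component could then set `href: releaseDownloadUrl(lastRelease.tag_name, downloadUrl)`, so a later organization rename would change one constant instead of every call site.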

@@ -139,7 +139,7 @@ const DropdownDownload = ({ lastRelease }: Props) => {

return {

...system,

href: `https://github.com/janhq/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

href: `https://github.com/menloresearch/jan/releases/download/${lastRelease.tag_name}/${downloadUrl}`,

size: asset ? formatFileSize(asset.size) : undefined,

}

})

@@ -23,7 +23,7 @@ const BuiltWithLove = () => {

</div>

<div className="flex flex-col lg:flex-row gap-8 mt-8 items-center justify-center">

<a

href="https://github.com/janhq/jan"

href="https://github.com/menloresearch/jan"

target="_blank"

className="dark:bg-white bg-black inline-flex w-56 px-4 py-3 rounded-xl cursor-pointer justify-center items-start space-x-4 "

>

@@ -44,7 +44,7 @@ const Hero = () => {

<div className="mt-10 text-center">

<div>

<Link

href="https://github.com/janhq/jan/releases"

href="https://github.com/menloresearch/jan/releases"

target="_blank"

className="hidden lg:inline-block"

>

@@ -95,7 +95,7 @@ const Home = () => {

<div className="container mx-auto relative z-10">

<div className="flex justify-center items-center mt-14 lg:mt-20 px-4">

<a

href={`https://github.com/janhq/jan/releases/tag/${lastVersion}`}

href={`https://github.com/menloresearch/jan/releases/tag/${lastVersion}`}

target="_blank"

rel="noopener noreferrer"

className="bg-black/40 px-3 lg:px-4 rounded-full h-10 inline-flex items-center max-w-full animate-fade-in delay-100"

@@ -270,7 +270,7 @@ const Home = () => {

data-delay="600"

>

<a

href="https://github.com/janhq/jan"

href="https://github.com/menloresearch/jan"

target="_blank"

rel="noopener noreferrer"

>

@@ -387,7 +387,7 @@ const Home = () => {

</div>

<a

className="hidden md:block"

href="https://github.com/janhq/jan"

href="https://github.com/menloresearch/jan"

target="_blank"

rel="noopener noreferrer"

>

@@ -413,7 +413,7 @@ const Home = () => {

</p>

<a

className="md:hidden mt-4 block w-full"

href="https://github.com/janhq/jan"

href="https://github.com/menloresearch/jan"

target="_blank"

rel="noopener noreferrer"

>

@@ -95,7 +95,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

})}

<li>

<a

href="https://github.com/janhq/jan/releases/latest"

href="https://github.com/menloresearch/jan/releases/latest"

target="_blank"

rel="noopener noreferrer"

>

@@ -141,7 +141,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

<FaLinkedinIn className="size-5" />

</a>

<a

href="https://github.com/janhq/jan"

href="https://github.com/menloresearch/jan"

target="_blank"

rel="noopener noreferrer"

className="rounded-lg flex items-center justify-center"

@@ -156,7 +156,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

{/* Mobile Download Button and Hamburger */}

<div className="lg:hidden flex items-center gap-3">

<a

href="https://github.com/janhq/jan/releases/latest"

href="https://github.com/menloresearch/jan/releases/latest"

target="_blank"

rel="noopener noreferrer"

>

@@ -278,7 +278,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

<FaLinkedinIn className="size-5" />

</a>

<a

href="https://github.com/janhq/jan"

href="https://github.com/menloresearch/jan"

target="_blank"

rel="noopener noreferrer"

className="text-black rounded-lg flex items-center justify-center"

@@ -296,7 +296,7 @@ const Navbar = ({ noScroll }: { noScroll?: boolean }) => {

asChild

>

<a

href="https://github.com/janhq/jan/releases/latest"

href="https://github.com/menloresearch/jan/releases/latest"

target="_blank"

rel="noopener noreferrer"

>

@@ -120,7 +120,7 @@ export function DropdownButton({

return {

...option,

href: `https://github.com/janhq/jan/releases/download/${lastRelease.tag_name}/${fileName}`,

href: `https://github.com/menloresearch/jan/releases/download/${lastRelease.tag_name}/${fileName}`,

size: asset ? formatFileSize(asset.size) : 'N/A',

}

})

@@ -18,7 +18,7 @@ description: Development setup, workflow, and contribution guidelines for Jan Se

1. **Clone Repository**

```bash

git clone https://github.com/janhq/jan-server

git clone https://github.com/menloresearch/jan-server

cd jan-server

```

@@ -19,7 +19,7 @@ Jan Server currently supports minikube for local development. Production Kuberne

1. **Clone the repository**

```bash

git clone https://github.com/janhq/jan-server

git clone https://github.com/menloresearch/jan-server

cd jan-server

```

@@ -24,4 +24,4 @@ Fixes 💫

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.5).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.5).

@@ -24,4 +24,4 @@ Jan now supports Mistral's new model Codestral. Thanks [Bartowski](https://huggi

More GGUF models can run in Jan - we rebased to llama.cpp b3012.Big thanks to [ggerganov](https://github.com/ggerganov)

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.0).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.0).

@@ -28,4 +28,4 @@ Jan now understands LaTeX, allowing users to process and understand complex math

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.4.12).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.4.12).

@@ -28,4 +28,4 @@ Users can now connect to OpenAI's new model GPT-4o.

For more details, see the [GitHub release notes.](https://github.com/janhq/jan/releases/tag/v0.4.13)

For more details, see the [GitHub release notes.](https://github.com/menloresearch/jan/releases/tag/v0.4.13)

@@ -16,4 +16,4 @@ More GGUF models can run in Jan - we rebased to llama.cpp b2961.

Huge shoutouts to [ggerganov](https://github.com/ggerganov) and contributors for llama.cpp, and [Bartowski](https://huggingface.co/bartowski) for GGUF models.

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.4.14).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.4.14).

@@ -26,4 +26,4 @@ We've updated to llama.cpp b3088 for better performance - thanks to [GG](https:/

- Reduced chat font weight (back to normal!)

- Restored the maximize button

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.1).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.1).

@@ -32,4 +32,4 @@ We've restored the tooltip hover functionality, which makes it easier to access

The right-click options for thread settings are now fully operational again. You can now manage your threads with this fix.

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.2).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.2).

@@ -23,4 +23,4 @@ We've been working on stability issues over the last few weeks. Jan is now more

- Fixed the GPU memory utilization bar

- Some UX and copy improvements

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.3).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.3).

@@ -32,4 +32,4 @@ Switching between threads used to reset your instruction settings. That’s fixe

### Minor UI Tweaks & Bug Fixes

We’ve also resolved issues with the input slider on the right panel and tackled several smaller bugs to keep everything running smoothly.

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.4).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.4).

@@ -23,4 +23,4 @@ Fixes 💫

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.7).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.7).

@@ -22,4 +22,4 @@ Jan v0.5.9 is here: fixing what needed fixing

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.9).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.9).

@@ -22,4 +22,4 @@ and various UI/UX enhancements 💫

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.8).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.8).

@@ -19,4 +19,4 @@ Jan v0.5.10 is live: Jan is faster, smoother, and more reliable.

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.10).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.10).

@@ -23,4 +23,4 @@ Jan v0.5.11 is here - critical issues fixed, Mac installation updated.

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.11).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.11).

@@ -25,4 +25,4 @@ Jan v0.5.11 is here - critical issues fixed, Mac installation updated.

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.12).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.12).

@@ -20,4 +20,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

Update your product or download the latest: https://jan.ai

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.13).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.13).

@@ -33,4 +33,4 @@ Llama

Update your Jan or [download the latest](https://jan.ai/).

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.14).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.14).

@@ -25,4 +25,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

Update your Jan or [download the latest](https://jan.ai/).

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.15).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.15).

@@ -26,4 +26,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

Update your Jan or [download the latest](https://jan.ai/).

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.16).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.16).

@@ -20,4 +20,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

Update your Jan or [download the latest](https://jan.ai/).

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.5.17).

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.5.17).

@ -18,4 +18,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.1).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.1).

|

||||

@ -18,4 +18,4 @@ import ChangelogHeader from "@/components/Changelog/ChangelogHeader"

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.3).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.3).

|

||||

@ -23,4 +23,4 @@ new MCP examples.

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For more details, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.5).

|

||||

For more details, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.5).

|

||||

@ -116,4 +116,4 @@ integrations. Stay tuned!

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.6).

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.6).

|

||||

|

||||

@ -89,4 +89,4 @@ We're continuing to optimize performance for large models, expand MCP integratio

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.7).

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.7).

|

||||

|

||||

@ -74,4 +74,4 @@ v0.6.8 focuses on stability and real workflows: major llama.cpp hardening, two n

|

||||

|

||||

Update your Jan or [download the latest](https://jan.ai/).

|

||||

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/janhq/jan/releases/tag/v0.6.8).

|

||||

For the complete list of changes, see the [GitHub release notes](https://github.com/menloresearch/jan/releases/tag/v0.6.8).

|

||||

|

||||

@ -135,5 +135,5 @@ Min-p: 0.0

|

||||

|

||||

## 🤝 Community & Support

|

||||

- **Discussions**: [HuggingFace Community](https://huggingface.co/Menlo/Jan-nano-128k/discussions)

|

||||

- **Issues**: [GitHub Repository](https://github.com/janhq/deep-research/issues)

|

||||

- **Issues**: [GitHub Repository](https://github.com/menloresearch/deep-research/issues)

|

||||

- **Discord**: Join our research community for tips and best practices

|

||||

|

||||

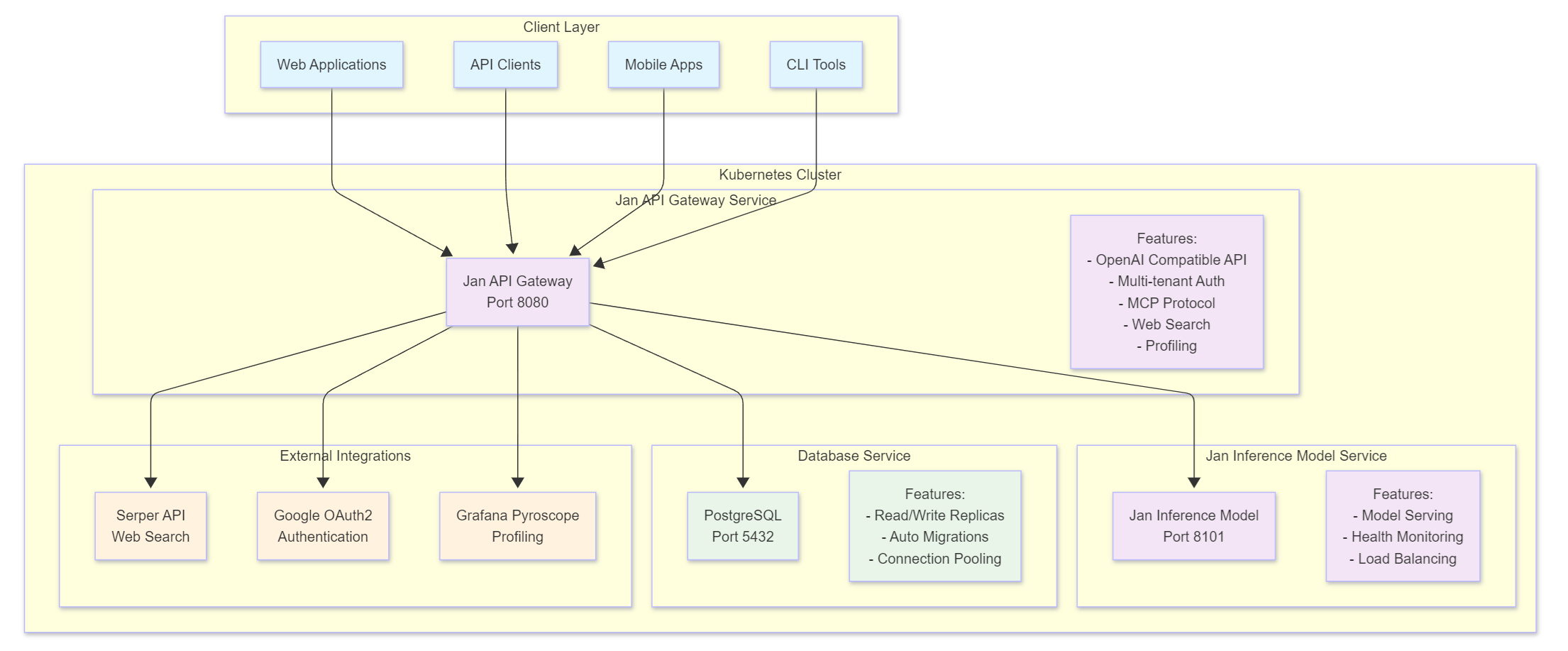

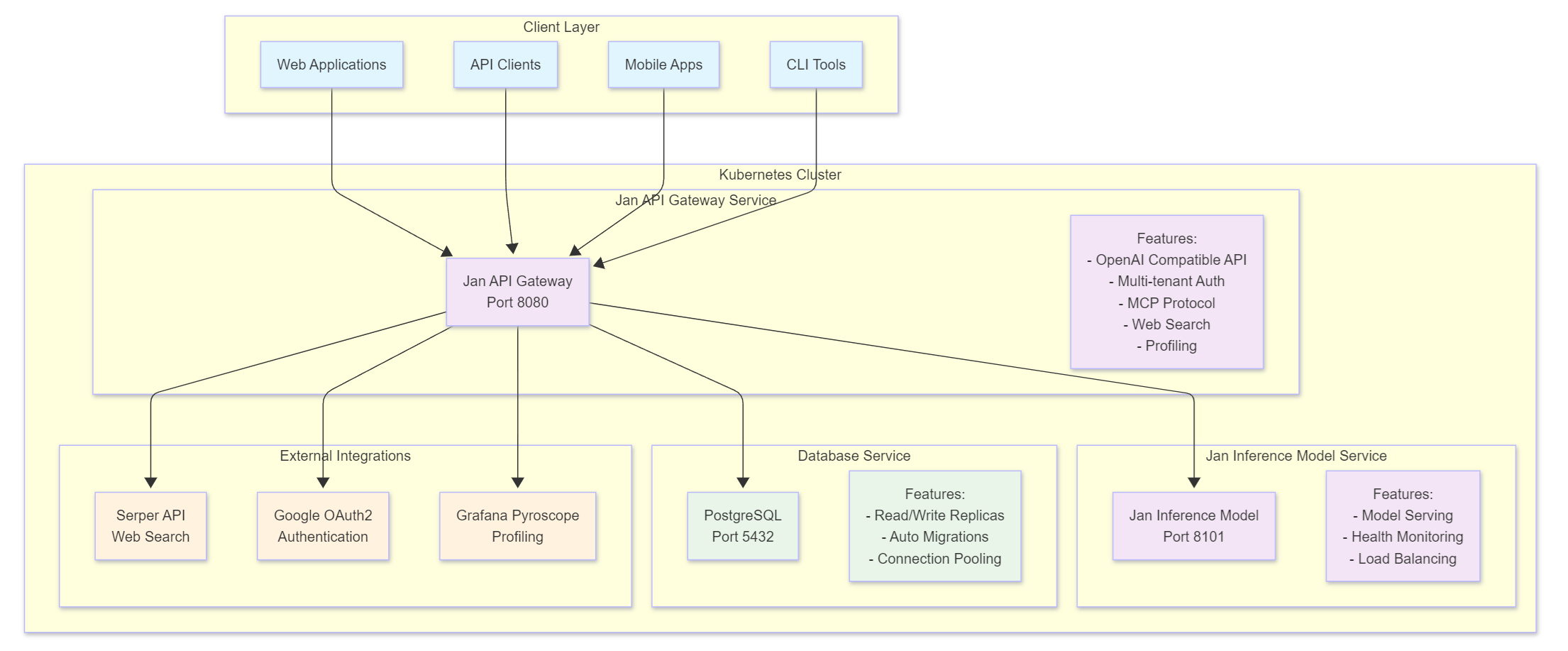

@ -9,7 +9,7 @@ Jan Server is a comprehensive self-hosted AI server platform that provides OpenA

|

||||

|

||||

Jan Server is a Kubernetes-native platform consisting of multiple microservices that work together to provide a complete AI infrastructure solution. It offers:

|

||||

|

||||

|

||||

|

||||

|

||||

### Key Features

|

||||

- **OpenAI-Compatible API**: Full compatibility with OpenAI's chat completion API

|

||||

|

||||

@ -3,7 +3,7 @@ title: Development

|

||||

description: Development setup, workflow, and contribution guidelines for Jan Server.

|

||||

---

|

||||

## Core Domain Models

|

||||

|

||||

|

||||

## Development Setup

|

||||

|

||||

### Prerequisites

|

||||

@ -42,7 +42,7 @@ description: Development setup, workflow, and contribution guidelines for Jan Se

|

||||

|

||||

1. **Clone Repository**

|

||||

```bash

|

||||

git clone https://github.com/janhq/jan-server

|

||||

git clone https://github.com/menloresearch/jan-server

|

||||

cd jan-server

|

||||

```

|

||||

|

||||

|

||||

@ -40,7 +40,7 @@ Jan Server is a Kubernetes-native platform consisting of multiple microservices

|

||||

- **Monitoring & Profiling**: Built-in performance monitoring and health checks

|

||||

|

||||

## System Architecture

|

||||

|

||||

|

||||

## Services

|

||||

|

||||

### Jan API Gateway

|

||||

|

||||

@@ -19,7 +19,7 @@ keywords:
 import Download from "@/components/Download"

 export const getStaticProps = async() => {
-const resRelease = await fetch('https://api.github.com/repos/janhq/jan/releases/latest')
+const resRelease = await fetch('https://api.github.com/repos/menloresearch/jan/releases/latest')
 const release = await resRelease.json()

 return {

@@ -19,9 +19,9 @@ keywords:
 import Home from "@/components/Home"

 export const getStaticProps = async() => {
-const resReleaseLatest = await fetch('https://api.github.com/repos/janhq/jan/releases/latest')
-const resRelease = await fetch('https://api.github.com/repos/janhq/jan/releases?per_page=500')
-const resRepo = await fetch('https://api.github.com/repos/janhq/jan')
+const resReleaseLatest = await fetch('https://api.github.com/repos/menloresearch/jan/releases/latest')
+const resRelease = await fetch('https://api.github.com/repos/menloresearch/jan/releases?per_page=500')
+const resRepo = await fetch('https://api.github.com/repos/menloresearch/jan')
 const repo = await resRepo.json()
 const latestRelease = await resReleaseLatest.json()
 const release = await resRelease.json()

@ -14,12 +14,12 @@ import CTABlog from '@/components/Blog/CTA'

|

||||

|

||||

Jan now supports [NVIDIA TensorRT-LLM](https://github.com/NVIDIA/TensorRT-LLM) in addition to [llama.cpp](https://github.com/ggerganov/llama.cpp), making Jan multi-engine and ultra-fast for users with Nvidia GPUs.

|

||||

|

||||

We've been excited for TensorRT-LLM for a while, and [had a lot of fun implementing it](https://github.com/janhq/nitro-tensorrt-llm). As part of the process, we've run some benchmarks, to see how TensorRT-LLM fares on consumer hardware (e.g. [4090s](https://www.nvidia.com/en-us/geforce/graphics-cards/40-series/), [3090s](https://www.nvidia.com/en-us/geforce/graphics-cards/30-series/)) we commonly see in the [Jan's hardware community](https://discord.com/channels/1107178041848909847/1201834752206974996).

|

||||

We've been excited for TensorRT-LLM for a while, and [had a lot of fun implementing it](https://github.com/menloresearch/nitro-tensorrt-llm). As part of the process, we've run some benchmarks, to see how TensorRT-LLM fares on consumer hardware (e.g. [4090s](https://www.nvidia.com/en-us/geforce/graphics-cards/40-series/), [3090s](https://www.nvidia.com/en-us/geforce/graphics-cards/30-series/)) we commonly see in the [Jan's hardware community](https://discord.com/channels/1107178041848909847/1201834752206974996).

|

||||

|

||||

<Callout type="info" >

|

||||

**Give it a try!** Jan's TensorRT-LLM extension is available in Jan v0.4.9. We precompiled some TensorRT-LLM models for you to try: `Mistral 7b`, `TinyLlama-1.1b`, `TinyJensen-1.1b` 😂

|

||||

|

||||

Bugs or feedback? Let us know on [GitHub](https://github.com/janhq/jan) or via [Discord](https://discord.com/channels/1107178041848909847/1201832734704795688).

|

||||

Bugs or feedback? Let us know on [GitHub](https://github.com/menloresearch/jan) or via [Discord](https://discord.com/channels/1107178041848909847/1201832734704795688).

|

||||

</Callout>

|

||||

|

||||

<Callout type="info" >

|

||||

|

||||

@ -70,34 +70,34 @@ brief survey of how other players approach deep research:

|

||||

| Kimi | Interactive synthesis | 50–100 | 30–60+ | PDF, Interactive website | Free |

|

||||

|

||||

In our testing, we used the following prompt to assess the quality of the generated report by

|

||||

the providers above. You can refer to the reports generated [here](https://github.com/janhq/prompt-experiments).

|

||||

the providers above. You can refer to the reports generated [here](https://github.com/menloresearch/prompt-experiments).

|

||||

|

||||

```

|

||||

Generate a comprehensive report about the state of AI in the past week. Include all

|

||||

new model releases and notable architectural improvements from a variety of sources.

|

||||

```

|

||||

|

||||

[Google's generated report](https://github.com/janhq/prompt-experiments/blob/main/Gemini%202.5%20Flash%20Report.pdf) was the most verbose, with a whopping 23 pages that reads

|

||||

[Google's generated report](https://github.com/menloresearch/prompt-experiments/blob/main/Gemini%202.5%20Flash%20Report.pdf) was the most verbose, with a whopping 23 pages that reads

|

||||

like a professional intelligence briefing. It opens with an executive summary,

|

||||

systematically categorizes developments, and provides forward-looking strategic

|

||||

insights—connecting OpenAI's open-weight release to broader democratization trends

|

||||

and linking infrastructure investments to competitive positioning.

|

||||

|

||||

[OpenAI](https://github.com/janhq/prompt-experiments/blob/main/OpenAI%20Deep%20Research.pdf) produced the most citation-heavy output with 134 references throughout 10 pages

|

||||

[OpenAI](https://github.com/menloresearch/prompt-experiments/blob/main/OpenAI%20Deep%20Research.pdf) produced the most citation-heavy output with 134 references throughout 10 pages

|

||||

(albeit most of them being from the same source).

|

||||

|

||||

[Perplexity](https://github.com/janhq/prompt-experiments/blob/main/Perplexity%20Deep%20Research.pdf) delivered the most actionable 6-page report that maximizes information

|

||||

[Perplexity](https://github.com/menloresearch/prompt-experiments/blob/main/Perplexity%20Deep%20Research.pdf) delivered the most actionable 6-page report that maximizes information

|

||||

density while maintaining scannability. Despite being the shortest, it captures all

|

||||

major developments with sufficient context for decision-making.

|

||||

|

||||

[Claude](https://github.com/janhq/prompt-experiments/blob/main/Claude%20Deep%20Research.pdf) produced a comprehensive analysis that interestingly ignored the time constraint,

|

||||

[Claude](https://github.com/menloresearch/prompt-experiments/blob/main/Claude%20Deep%20Research.pdf) produced a comprehensive analysis that interestingly ignored the time constraint,

|

||||

covering an 8-month period from January-August 2025 instead of the requested week (Jul 31-Aug

|

||||

7th 2025). Rather than cataloging recent events, Claude traced the evolution of trends over months.

|

||||

|

||||

[Grok](https://github.com/janhq/prompt-experiments/blob/main/Grok%203%20Deep%20Research.pdf) produced a well-structured but relatively shallow 5-page academic-style report that

|

||||

[Grok](https://github.com/menloresearch/prompt-experiments/blob/main/Grok%203%20Deep%20Research.pdf) produced a well-structured but relatively shallow 5-page academic-style report that

|

||||

read more like an event catalog than strategic analysis.

|

||||

|

||||

[Kimi](https://github.com/janhq/prompt-experiments/blob/main/Kimi%20AI%20Deep%20Research.pdf) produced a comprehensive 13-page report with systematic organization covering industry developments, research breakthroughs, and policy changes, but notably lacks proper citations throughout most of the content despite claiming to use 50-100 sources.

|

||||

[Kimi](https://github.com/menloresearch/prompt-experiments/blob/main/Kimi%20AI%20Deep%20Research.pdf) produced a comprehensive 13-page report with systematic organization covering industry developments, research breakthroughs, and policy changes, but notably lacks proper citations throughout most of the content despite claiming to use 50-100 sources.

|

||||

|

||||

### Understanding Search Strategies

|

||||

|

||||

|

||||

@ -13,7 +13,7 @@ import CTABlog from '@/components/Blog/CTA'

|

||||

|

||||

## Abstract

|

||||

|

||||

We present a straightforward approach to customizing small, open-source models using fine-tuning and RAG that outperforms GPT-3.5 for specialized use cases. With it, we achieved superior Q&A results of [technical documentation](https://nitro.jan.ai/docs) for a small codebase [codebase](https://github.com/janhq/nitro).

|

||||

We present a straightforward approach to customizing small, open-source models using fine-tuning and RAG that outperforms GPT-3.5 for specialized use cases. With it, we achieved superior Q&A results of [technical documentation](https://nitro.jan.ai/docs) for a small codebase [codebase](https://github.com/menloresearch/nitro).

|

||||

|

||||

In short, (1) extending a general foundation model like [Mistral](https://huggingface.co/mistralai/Mistral-7B-v0.1) with strong math and coding, and (2) training it over a high-quality, synthetic dataset generated from the intended corpus, and (3) adding RAG capabilities, can lead to significant accuracy improvements.

|

||||

|

||||

@ -93,11 +93,11 @@ This final model can be found [here on Huggingface](https://huggingface.co/jan-h

|

||||

|

||||

As an additional step, we also added [Retrieval Augmented Generation (RAG)](https://blogs.nvidia.com/blog/what-is-retrieval-augmented-generation/) as an experiment parameter.

|

||||

|

||||

A simple RAG setup was done using **[Llamaindex](https://www.llamaindex.ai/)** and the **[bge-en-base-v1.5 embedding](https://huggingface.co/BAAI/bge-base-en-v1.5)** model for efficient documentation retrieval and question-answering. You can find the RAG implementation [here](https://github.com/janhq/open-foundry/blob/main/rag-is-not-enough/rag/nitro_rag.ipynb).

|

||||

A simple RAG setup was done using **[Llamaindex](https://www.llamaindex.ai/)** and the **[bge-en-base-v1.5 embedding](https://huggingface.co/BAAI/bge-base-en-v1.5)** model for efficient documentation retrieval and question-answering. You can find the RAG implementation [here](https://github.com/menloresearch/open-foundry/blob/main/rag-is-not-enough/rag/nitro_rag.ipynb).

|

||||

|

||||

## Benchmarking the Results

|

||||

|

||||

We curated a new set of [50 multiple-choice questions](https://github.com/janhq/open-foundry/blob/main/rag-is-not-enough/rag/mcq_nitro.csv) (MCQ) based on the Nitro docs. The questions had varying levels of difficulty and had trick components that challenged the model's ability to discern misleading information.

|

||||

We curated a new set of [50 multiple-choice questions](https://github.com/menloresearch/open-foundry/blob/main/rag-is-not-enough/rag/mcq_nitro.csv) (MCQ) based on the Nitro docs. The questions had varying levels of difficulty and had trick components that challenged the model's ability to discern misleading information.

|

||||

|

||||

|

||||

|

||||

@ -121,7 +121,7 @@ We conclude that this combination of model merging + finetuning + RAG yields pro

|

||||

|

||||

Anecdotally, we’ve had some success using this model in practice to onboard new team members to the Nitro codebase.

|

||||

|

||||

A full research report with more statistics can be found [here](https://github.com/janhq/open-foundry/blob/main/rag-is-not-enough/README.md).

|

||||

A full research report with more statistics can be found [here](https://github.com/menloresearch/open-foundry/blob/main/rag-is-not-enough/README.md).

|

||||

|

||||

# References

|

||||

|

||||

|

||||

@ -203,7 +203,7 @@ When to choose ChatGPT Plus instead:

|

||||

|

||||

Ready to try gpt-oss?

|

||||

- Download Jan: [https://jan.ai/](https://jan.ai/)

|

||||

- View source code: [https://github.com/janhq/jan](https://github.com/janhq/jan)

|

||||

- View source code: [https://github.com/menloresearch/jan](https://github.com/menloresearch/jan)

|

||||

- Need help? Check our [local AI guide](/post/run-ai-models-locally) for beginners

|

||||

|

||||

<CTABlog />

|

||||

@ -4,7 +4,7 @@ title: Support - Jan

|

||||

|

||||

# Support

|

||||

|

||||

- Bugs & requests: file a GitHub ticket [here](https://github.com/janhq/jan/issues)

|

||||

- Bugs & requests: file a GitHub ticket [here](https://github.com/menloresearch/jan/issues)

|

||||

- For discussion: join our Discord [here](https://discord.gg/FTk2MvZwJH)

|

||||

- For business inquiries: email hello@jan.ai

|

||||

- For jobs: please email hr@jan.ai

|

||||

@ -31,7 +31,7 @@ const config: DocsThemeConfig = {

|

||||

</div>

|

||||

</span>

|

||||

),

|

||||

docsRepositoryBase: 'https://github.com/janhq/jan/tree/dev/docs',

|

||||

docsRepositoryBase: 'https://github.com/menloresearch/jan/tree/dev/docs',

|

||||

feedback: {

|

||||

content: 'Question? Give us feedback →',

|

||||

labels: 'feedback',

|

||||

|

||||

@ -70,6 +70,6 @@ There are a few things to keep in mind when writing your extension code:

|

||||

```

|

||||

|

||||

For more information about the Jan Extension Core module, see the

|

||||

[documentation](https://github.com/janhq/jan/blob/main/core/README.md).

|

||||

[documentation](https://github.com/menloresearch/jan/blob/main/core/README.md).

|

||||

|

||||

So, what are you waiting for? Go ahead and start customizing your extension!

|

||||

|

||||

@@ -56,7 +56,7 @@ async function fetchRemoteSupportedBackends(
 supportedBackends: string[]
 ): Promise<{ version: string; backend: string }[]> {
 // Pull the latest releases from the repo
-const { releases } = await _fetchGithubReleases('janhq', 'llama.cpp')
+const { releases } = await _fetchGithubReleases('menloresearch', 'llama.cpp')
 releases.sort((a, b) => b.tag_name.localeCompare(a.tag_name))
 releases.splice(10) // keep only the latest 10 releases
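The context lines of this hunk sort releases by tag name in descending order and then truncate the list to ten entries. A minimal sketch of that step as a pure function follows; the `Release` shape, the `latestReleases` name, and the sample tags are assumptions for illustration, not code from this repository.

```typescript
// Hypothetical shape: only the field the sort relies on.
interface Release {
  tag_name: string
}

// Return the `limit` newest releases, newest first, without mutating the input.
// The hunk sorts and splices in place; copying first is a choice made for this sketch.
function latestReleases(releases: Release[], limit = 10): Release[] {
  return [...releases]
    .sort((a, b) => b.tag_name.localeCompare(a.tag_name))
    .slice(0, limit)
}

// Sample tags are made up for the example.
const sample: Release[] = [
  { tag_name: 'b6100' },
  { tag_name: 'b6324' },
  { tag_name: 'b6200' },
]
const top2 = latestReleases(sample, 2).map((r) => r.tag_name)
console.log(top2)
```

Note that a plain `localeCompare` compares tags as text, so the ordering matches release order only while the numeric part of the tags has the same number of digits.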

@@ -98,7 +98,7 @@ export async function listSupportedBackends(): Promise<
 const sysType = `${os_type}-${arch}`
 let supportedBackends = []

-// NOTE: janhq's tags for llama.cpp builds are a bit different
+// NOTE: menloresearch's tags for llama.cpp builds are a bit different
 // TODO: fetch versions from the server?
 // TODO: select CUDA version based on driver version
 if (sysType == 'windows-x86_64') {

@@ -247,7 +247,7 @@ export async function downloadBackend(
 // Build URLs per source
 const backendUrl =
 source === 'github'
-? `https://github.com/janhq/llama.cpp/releases/download/${version}/llama-${version}-bin-${backend}.tar.gz`
+? `https://github.com/menloresearch/llama.cpp/releases/download/${version}/llama-${version}-bin-${backend}.tar.gz`
 : `https://catalog.jan.ai/llama.cpp/releases/${version}/llama-${version}-bin-${backend}.tar.gz`

 const downloadItems = [
@@ -263,7 +263,7 @@ export async function downloadBackend(
 downloadItems.push({
 url:
 source === 'github'
-? `https://github.com/janhq/llama.cpp/releases/download/${version}/cudart-llama-bin-${platformName}-cu11.7-x64.tar.gz`
+? `https://github.com/menloresearch/llama.cpp/releases/download/${version}/cudart-llama-bin-${platformName}-cu11.7-x64.tar.gz`
 : `https://catalog.jan.ai/llama.cpp/releases/${version}/cudart-llama-bin-${platformName}-cu11.7-x64.tar.gz`,
 save_path: await joinPath([libDir, 'cuda11.tar.gz']),
 proxy: proxyConfig,
@@ -272,7 +272,7 @@ export async function downloadBackend(
 downloadItems.push({
 url:
 source === 'github'
-? `https://github.com/janhq/llama.cpp/releases/download/${version}/cudart-llama-bin-${platformName}-cu12.0-x64.tar.gz`
+? `https://github.com/menloresearch/llama.cpp/releases/download/${version}/cudart-llama-bin-${platformName}-cu12.0-x64.tar.gz`
 : `https://catalog.jan.ai/llama.cpp/releases/${version}/cudart-llama-bin-${platformName}-cu12.0-x64.tar.gz`,
 save_path: await joinPath([libDir, 'cuda12.tar.gz']),
 proxy: proxyConfig,
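The three `downloadBackend` hunks repeat the same owner/host string in each template literal, which is why one rename touches three lines. One possible way to keep the host in a single place is sketched below. The helper names, the `DownloadSource` type, and the sample version, backend, and platform values are hypothetical; only the URL patterns are copied from the hunks.

```typescript
// Hypothetical source tag; the hunks only distinguish 'github' from everything else.
type DownloadSource = 'github' | 'catalog'

// Base URL of a llama.cpp release on either host (patterns copied from the hunks).
function releaseBase(source: DownloadSource, version: string): string {
  return source === 'github'
    ? `https://github.com/menloresearch/llama.cpp/releases/download/${version}`
    : `https://catalog.jan.ai/llama.cpp/releases/${version}`
}

// Archive holding the llama.cpp binaries for one backend.
function backendArchiveUrl(
  source: DownloadSource,
  version: string,
  backend: string
): string {
  return `${releaseBase(source, version)}/llama-${version}-bin-${backend}.tar.gz`
}

// Archive holding the CUDA runtime libraries for one CUDA version.
function cudartArchiveUrl(
  source: DownloadSource,
  version: string,
  platformName: string,
  cuda: '11.7' | '12.0'
): string {
  return `${releaseBase(source, version)}/cudart-llama-bin-${platformName}-cu${cuda}-x64.tar.gz`
}

// Sample values below are made up for the example.
const githubUrl = backendArchiveUrl('github', 'b6324', 'win-avx2-x64')
const catalogCudart = cudartArchiveUrl('catalog', 'b6324', 'win', '12.0')
console.log(githubUrl)
console.log(catalogCudart)
```

With this layout a future owner change would edit `releaseBase` only, at the cost of one more level of indirection.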

@ -35,7 +35,7 @@

|

||||

</screenshots>

|

||||

|

||||

<url type="homepage">https://jan.ai/</url>

|

||||

<url type="bugtracker">https://github.com/janhq/jan/issues</url>

|

||||

<url type="bugtracker">https://github.com/menloresearch/jan/issues</url>

|

||||

|

||||

<content_rating type="oars-1.1" />

|

||||

|

||||

|

||||

@ -4,7 +4,7 @@ version = "0.6.599"

|

||||

description = "Use offline LLMs with your own data. Run open source models like Llama2 or Falcon on your internal computers/servers."

|

||||

authors = ["Jan <service@jan.ai>"]

|

||||

license = "MIT"

|

||||

repository = "https://github.com/janhq/jan"

|

||||

repository = "https://github.com/menloresearch/jan"

|

||||

edition = "2021"

|

||||

rust-version = "1.77.2"

|

||||

resolver = "2"

|

||||

|

||||

@ -4,7 +4,7 @@ version = "0.6.599"

|

||||

authors = ["Jan <service@jan.ai>"]

|

||||

description = "Tauri plugin for hardware information and GPU monitoring"

|

||||

license = "MIT"

|

||||

repository = "https://github.com/janhq/jan"

|

||||

repository = "https://github.com/menloresearch/jan"

|

||||

edition = "2021"

|

||||

rust-version = "1.77.2"

|

||||

exclude = ["/examples", "/dist-js", "/guest-js", "/node_modules"]

|

||||

|

||||

@ -4,7 +4,7 @@ version = "0.6.599"

|

||||

authors = ["Jan <service@jan.ai>"]

|

||||

description = "Tauri plugin for managing Jan LlamaCpp server processes and model loading"

|

||||

license = "MIT"

|

||||

repository = "https://github.com/janhq/jan"

|

||||

repository = "https://github.com/menloresearch/jan"

|

||||

edition = "2021"

|

||||

rust-version = "1.77.2"

|

||||

exclude = ["/examples", "/dist-js", "/guest-js", "/node_modules"]

|

||||

|

||||

@ -4,7 +4,7 @@ version = "0.1.0"

|

||||

authors = ["Jan <service@jan.ai>"]

|

||||

description = "Tauri plugin for RAG utilities (document parsing, types)"

|

||||

license = "MIT"

|

||||

repository = "https://github.com/janhq/jan"

|

||||

repository = "https://github.com/menloresearch/jan"

|

||||

edition = "2021"

|

||||

rust-version = "1.77.2"

|

||||

exclude = ["/examples", "/dist-js", "/guest-js", "/node_modules"]

|

||||

|

||||

@ -4,7 +4,7 @@ version = "0.1.0"

|

||||

authors = ["Jan <service@jan.ai>"]

|

||||

description = "Tauri plugin for vector storage and similarity search"

|

||||

license = "MIT"

|

||||

repository = "https://github.com/janhq/jan"

|

||||

repository = "https://github.com/menloresearch/jan"

|

||||

edition = "2021"

|

||||

rust-version = "1.77.2"

|

||||

exclude = ["/examples", "/dist-js", "/guest-js", "/node_modules"]

|

||||

|

||||

@ -72,7 +72,7 @@

|

||||

"updater": {

|

||||

"pubkey": "dW50cnVzdGVkIGNvbW1lbnQ6IG1pbmlzaWduIHB1YmxpYyBrZXk6IDJFNDEzMEVCMUEzNUFENDQKUldSRXJUVWE2ekJCTGc1Mm1BVXgrWmtES3huUlBFR0lCdG5qbWFvMzgyNDhGN3VTTko5Q1NtTW0K",

|

||||

"endpoints": [

|

||||

"https://github.com/janhq/jan/releases/latest/download/latest.json"

|

||||

"https://github.com/menloresearch/jan/releases/latest/download/latest.json"

|

||||

],

|

||||

"windows": {

|

||||

"installMode": "passive"

|

||||

|

||||

@@ -10,7 +10,6 @@ import {
 IconAtom,
 IconWorld,
 IconCodeCircle2,
-IconSparkles,
 } from '@tabler/icons-react'
 import { Fragment } from 'react/jsx-runtime'

@@ -30,8 +29,6 @@ const Capabilities = ({ capabilities }: CapabilitiesProps) => {
 icon = <IconEye className="size-4" />
 } else if (capability === 'tools') {
 icon = <IconTool className="size-3.5" />
-} else if (capability === 'proactive') {
-icon = <IconSparkles className="size-3.5" />
 } else if (capability === 'reasoning') {
 icon = <IconAtom className="size-3.5" />
 } else if (capability === 'embeddings') {
@@ -57,11 +54,7 @@ const Capabilities = ({ capabilities }: CapabilitiesProps) => {
 </TooltipTrigger>
 <TooltipContent>
 <p>
-{capability === 'web_search'
-? 'Web Search'
-: capability === 'proactive'
-? 'Proactive'
-: capability}
+{capability === 'web_search' ? 'Web Search' : capability}
 </p>
 </TooltipContent>
 </Tooltip>

@ -16,8 +16,6 @@ const LANGUAGES = [

|

||||

{ value: 'zh-CN', label: '简体中文' },

|

||||

{ value: 'zh-TW', label: '繁體中文' },

|

||||

{ value: 'de-DE', label: 'Deutsch' },

|

||||

{ value: 'pt-BR', label: 'Português (Brasil)' },

|

||||

{ value: 'ja', label: '日本語' },

|

||||

]

|

||||

|

||||

export default function LanguageSwitcher() {

|

||||

|

||||

@@ -152,19 +152,12 @@ export const ModelInfoHoverCard = ({
 </div>

 {/* Features Section */}
-{(model.num_mmproj > 0 || model.tools || (model.num_mmproj > 0 && model.tools)) && (
+{(model.num_mmproj > 0 || model.tools) && (
 <div className="border-t border-main-view-fg/10 pt-3">
 <h5 className="text-xs font-medium text-main-view-fg/70 mb-2">
 Features
 </h5>
 <div className="flex flex-wrap gap-2">
-{model.tools && (
-<div className="flex items-center gap-1.5 px-2 py-1 bg-main-view-fg/10 rounded-md">
-<span className="text-xs text-main-view-fg font-medium">
-Tools
-</span>
-</div>
-)}
 {model.num_mmproj > 0 && (
 <div className="flex items-center gap-1.5 px-2 py-1 bg-main-view-fg/10 rounded-md">
 <span className="text-xs text-main-view-fg font-medium">
@@ -172,10 +165,10 @@ export const ModelInfoHoverCard = ({
 </span>
 </div>
 )}
-{model.num_mmproj > 0 && model.tools && (
+{model.tools && (
 <div className="flex items-center gap-1.5 px-2 py-1 bg-main-view-fg/10 rounded-md">
 <span className="text-xs text-main-view-fg font-medium">
-Proactive
+Tools
 </span>
 </div>
 )}
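The two forms of the Features guard in `ModelInfoHoverCard` differ only by the extra `(model.num_mmproj > 0 && model.tools)` term, which the absorption law makes redundant: whenever both conditions hold, each one already holds on its own. A small exhaustive check, as a sketch that models both inputs as plain booleans (an assumption; the real `model.tools` field may be any truthy value):

```typescript
// Longer and shorter guards from the hunk, written as plain predicates.
const longGuard = (hasVision: boolean, hasTools: boolean): boolean =>
  hasVision || hasTools || (hasVision && hasTools)
const shortGuard = (hasVision: boolean, hasTools: boolean): boolean =>
  hasVision || hasTools

// Compare the two guards over all four input combinations.
const bools = [false, true]
const guardsAgree = bools.every((v) =>
  bools.every((t) => longGuard(v, t) === shortGuard(v, t))
)
console.log(guardsAgree)
```

So the guard change does not alter when the Features section renders; the visible difference in these hunks is which badges appear inside it.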

@ -1,124 +0,0 @@

|

||||

import { describe, it, expect, vi } from 'vitest'

|

||||

import { render, screen } from '@testing-library/react'

|

||||

import Capabilities from '../Capabilities'

|

||||

|

||||

// Mock Tooltip components

|

||||

vi.mock('@/components/ui/tooltip', () => ({

|

||||

Tooltip: ({ children }: { children: React.ReactNode }) => <div>{children}</div>,

|

||||

TooltipContent: ({ children }: { children: React.ReactNode }) => <div>{children}</div>,

|

||||

TooltipProvider: ({ children }: { children: React.ReactNode }) => <div>{children}</div>,

|

||||

TooltipTrigger: ({ children }: { children: React.ReactNode }) => <div>{children}</div>,

|

||||

}))

|

||||

|

||||

// Mock Tabler icons

|

||||

vi.mock('@tabler/icons-react', () => ({

|

||||

IconEye: () => <div data-testid="icon-eye">Eye Icon</div>,

|

||||

IconTool: () => <div data-testid="icon-tool">Tool Icon</div>,

|

||||

IconSparkles: () => <div data-testid="icon-sparkles">Sparkles Icon</div>,

|

||||

IconAtom: () => <div data-testid="icon-atom">Atom Icon</div>,

|

||||

IconWorld: () => <div data-testid="icon-world">World Icon</div>,

|

||||

IconCodeCircle2: () => <div data-testid="icon-code">Code Icon</div>,

|

||||

}))

|

||||

|

||||

describe('Capabilities', () => {

|

||||

it('should render vision capability with eye icon', () => {

|

||||

render(<Capabilities capabilities={['vision']} />)

|

||||

|

||||

const eyeIcon = screen.getByTestId('icon-eye')

|

||||

expect(eyeIcon).toBeInTheDocument()

|

||||

})

|

||||

|

||||

it('should render tools capability with tool icon', () => {

|

||||

render(<Capabilities capabilities={['tools']} />)

|

||||

|

||||

const toolIcon = screen.getByTestId('icon-tool')

|

||||

expect(toolIcon).toBeInTheDocument()

|

||||

})

|

||||

|

||||

it('should render proactive capability with sparkles icon', () => {

|

||||

render(<Capabilities capabilities={['proactive']} />)

|

||||

|

||||

const sparklesIcon = screen.getByTestId('icon-sparkles')

|

||||

expect(sparklesIcon).toBeInTheDocument()

|

||||

})

|

||||

|

||||

it('should render reasoning capability with atom icon', () => {

|

||||

render(<Capabilities capabilities={['reasoning']} />)

|

||||

|

||||

const atomIcon = screen.getByTestId('icon-atom')

|

||||

expect(atomIcon).toBeInTheDocument()

|

||||

})

|

||||

|

||||

it('should render web_search capability with world icon', () => {

|

||||

render(<Capabilities capabilities={['web_search']} />)

|

||||

|

||||

const worldIcon = screen.getByTestId('icon-world')

|

||||

expect(worldIcon).toBeInTheDocument()

|

||||

})

|

||||

|

||||