Co-authored-by: Hien To <>

Jan

Jan is a free, source-available, fair-code licensed AI inference platform. We help enterprises, small businesses, and hobbyists self-host AI efficiently on their own infrastructure so they can protect their data, lower costs, and put powerful AI capabilities in the hands of users.

Features

- Web, Mobile and APIs

- LLMs and Generative Art models

- AI Catalog

- Model Installer

- User Management

- Support for Apple Silicon and CPU architectures

Installation

Pre-Requisites

- Supported Operating Systems: This setup is only tested and supported on Linux, macOS with Docker Desktop, or Windows Subsystem for Linux (WSL) with Docker. On Apple Silicon Macs (M1, M2), remember to change the Docker platform:

  export DOCKER_DEFAULT_PLATFORM=linux/amd64

- Docker: Make sure you have Docker installed on your machine. You can install Docker by following the instructions in the official Docker documentation.

- Docker Compose: Make sure you also have Docker Compose installed. If not, follow the instructions in the official Docker Compose documentation.

- Clone the Repository: Clone the repository containing the docker-compose.yml and pull the latest git submodules:

  git clone https://github.com/janhq/jan.git

  cd jan

  # Pull latest submodule

  git submodule update --init

- Environment Variables: You will need to set up several environment variables for services such as Keycloak and Postgres. You can place them in .env files in the respective folders, as shown in the docker-compose.yml. Start by copying the sample global env file:

  cp sample.env .env

  | Service (Docker) | env file |
  |---|---|
  | Global env | .env (just run cp sample.env .env) |
  | Keycloak | Global .env; the realm is initialized from conf/keycloak_conf/example-realm.json |
  | Keycloak PostgresDB | Global .env |
  | jan-inference | Global .env |
  | app-backend (hasura) | conf/sample.env_app-backend |
  | app-backend PostgresDB | conf/sample.env_app-backend-postgres |
  | web-client | conf/sample.env_web-client |
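The prerequisites above can be checked before running Docker Compose. The following is a minimal preflight sketch, not part of the Jan repository: the function names (jan_docker_platform, jan_check_docker, jan_missing_env_keys) are hypothetical, it assumes a POSIX shell run from the root of the cloned repository, and the keys it checks (KEYCLOAK_ADMIN, KEYCLOAK_ADMIN_PASSWORD) are taken from the service table as examples only.

```shell
#!/bin/sh
# Hypothetical preflight helpers for the Jan prerequisites (not shipped with Jan).

# Print the Docker platform to force for an OS/arch pair, or nothing.
# Apple Silicon Macs report "Darwin" / "arm64" from uname.
jan_docker_platform() {
  case "$1/$2" in
    Darwin/arm64) echo "linux/amd64" ;;
  esac
}

# Fail with a message if docker or the docker compose plugin is missing.
jan_check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found on PATH" >&2
    return 1
  fi
  if ! docker compose version >/dev/null 2>&1; then
    echo "docker compose is not available" >&2
    return 1
  fi
}

# Print each KEY that is missing or empty in ENV_FILE, one per line.
# Assumes simple KEY=value lines, as in sample.env.
jan_missing_env_keys() {
  env_file=$1
  shift
  for key in "$@"; do
    grep -Eq "^${key}=.+" "$env_file" || echo "$key"
  done
}

# Export the platform override only on Apple Silicon, as the README asks.
platform=$(jan_docker_platform "$(uname -s)" "$(uname -m)")
if [ -n "$platform" ]; then
  export DOCKER_DEFAULT_PLATFORM="$platform"
fi

# Example (run manually from the repository root):
#   jan_check_docker
#   [ -f .env ] || cp sample.env .env
#   jan_missing_env_keys .env KEYCLOAK_ADMIN KEYCLOAK_ADMIN_PASSWORD
```

Source the file (for example `. ./preflight.sh`, a hypothetical name) rather than executing it, so the DOCKER_DEFAULT_PLATFORM export reaches your current shell.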

Docker Compose

Jan offers a Docker Compose deployment that automates the setup process. First, download the models:

# Download models

# Runway SD 1.5

wget https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors -P jan-inference/sd/models

# Download LLM

wget https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML/resolve/main/llama-2-7b-chat.ggmlv3.q4_1.bin -P jan-inference/llm/models
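Re-running the wget commands above after an interrupted transfer can leave a partial file, or a second copy with a .1 suffix when the target already exists. A minimal sketch, assuming GNU wget, that resumes instead: `wget -c` continues a partial download and typically does nothing if the file is already complete. The helper names (jan_model_target, jan_fetch_model) and variables are hypothetical; only the URLs and folders come from the commands above.

```shell
#!/bin/sh
# Hypothetical helpers around the README's wget commands (not part of Jan).

# Print the local path wget -P DIR uses for URL: DIR/<last URL segment>.
jan_model_target() {
  url=$1
  dir=$2
  echo "${dir%/}/${url##*/}"
}

# Download URL into DIR, resuming a partial file instead of creating a copy.
jan_fetch_model() {
  url=$1
  dir=$2
  mkdir -p "$dir" || return 1
  wget -c "$url" -P "$dir" || return 1
  echo "saved: $(jan_model_target "$url" "$dir")"
}

# Model URLs taken from the README commands above.
JAN_SD_URL="https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors"
JAN_LLM_URL="https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML/resolve/main/llama-2-7b-chat.ggmlv3.q4_1.bin"

# Example (run manually; each file is several GB):
#   jan_fetch_model "$JAN_SD_URL" jan-inference/sd/models
#   jan_fetch_model "$JAN_LLM_URL" jan-inference/llm/models
```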

Run the following command to start all the services defined in docker-compose.yml:

# Docker Compose up

docker compose up

To run in detached mode:

# Docker Compose up detached mode

docker compose up -d

| Service (Docker) | URL | Credentials |
|---|---|---|
| Keycloak | http://localhost:8088 | Admin credentials are set via the environment variables KEYCLOAK_ADMIN and KEYCLOAK_ADMIN_PASSWORD |
| app-backend (hasura) | http://localhost:8080 | The admin secret is set via the environment variable HASURA_GRAPHQL_ADMIN_SECRET in conf/sample.env_app-backend |
| web-client | http://localhost:3000 | Users sign up through Keycloak; a default user (username: username, password: password) is created from conf/keycloak_conf/example-realm.json |
| llm service | http://localhost:8000 | |
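Containers can take a while to become reachable after docker compose up -d. A minimal sketch, assuming curl is installed, that polls the URLs from the table above until each one answers with any HTTP response. The helper names (jan_wait_for, jan_wait_all) are hypothetical and the 60-attempt default is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical readiness check for the service URLs in the table above.

# Poll URL once per second until it returns any HTTP response.
# Gives up after TRIES attempts (default 60) and returns 1.
jan_wait_for() {
  url=$1
  tries=${2:-60}
  while [ "$tries" -gt 0 ]; do
    # Without -f, curl exits 0 for any HTTP status and non-zero if unreachable.
    if curl -s -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  echo "timed out waiting for $url" >&2
  return 1
}

# Check every service from the table; returns 1 if any stays unreachable.
jan_wait_all() {
  rc=0
  for service_url in http://localhost:8088 http://localhost:8080 \
      http://localhost:3000 http://localhost:8000; do
    if jan_wait_for "$service_url" "${1:-60}"; then
      echo "up: $service_url"
    else
      rc=1
    fi
  done
  return "$rc"
}

# Example (after docker compose up -d):
#   jan_wait_all
```

Treating any HTTP status as "up" keeps the check independent of each service's routes; it only confirms that something is listening on the port.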

Usage

To get started with Jan, follow these steps:

- Install the platform as per the instructions above.

- Launch the web application at http://localhost:3000.

- Log in with the default user (username: username, password: password).

- Test the LLM in a chatgpt session.

Developers

Architecture

- Architecture Diagram

Dependencies

- Keycloak Community (Apache-2.0)

- Hasura Community Edition (Apache-2.0)

Repo Structure

Jan is a monorepo that pulls in the following submodules:

├── docker-compose.yml
├── mobile-client
├── web-client
├── app-backend
├── inference-backend
├── docs # Developer Docs
└── adrs # Architecture Decision Records

Live Demo

You can access the live demo at https://cloud.jan.ai.

Common Issues and Troubleshooting

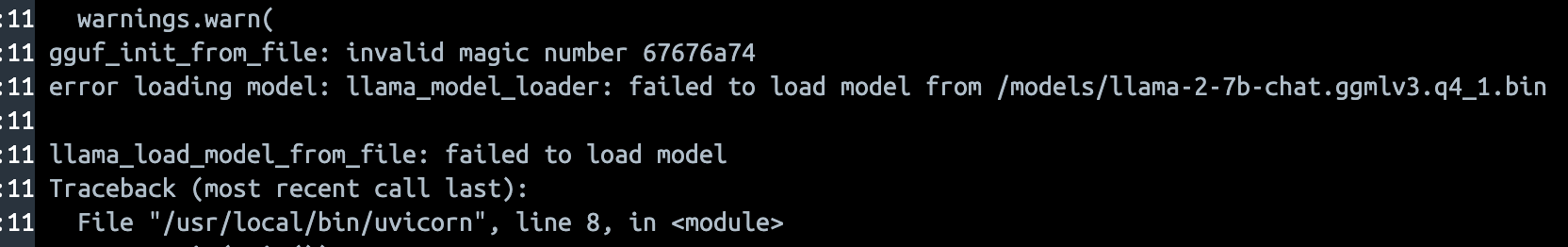

Error in jan-inference service

- Error: model download incomplete

- Solution:

  - Manually download the LLM model using the URL specified in the MODEL_URL environment variable in the .env file. The URL is typically https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML/resolve/main/llama-2-7b-chat.ggmlv3.q4_1.bin

  - Copy the downloaded file llama-2-7b-chat.ggmlv3.q4_1.bin to the folder jan-inference/llm/models.

  - Run docker compose down followed by docker compose up -d to restart the services.

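The manual fix above can be scripted. A minimal sketch, assuming the .env file uses simple MODEL_URL=value lines and that you run it from the repository root; the function names (jan_env_value, jan_redownload_llm) are hypothetical. It overwrites the model file in jan-inference/llm/models, so an incomplete copy is replaced rather than resumed, and then restarts the services as described.

```shell
#!/bin/sh
# Hypothetical helper for the "model download incomplete" fix (not part of Jan).

# Print the value of KEY from FILE: the last KEY=value line wins and
# one pair of surrounding double quotes is removed.
jan_env_value() {
  key=$1
  file=$2
  sed -n "s/^${key}=//p" "$file" | tail -n 1 | sed -e 's/^"//' -e 's/"$//'
}

# Re-download the LLM named by MODEL_URL in .env, then restart the stack.
jan_redownload_llm() {
  model_url=$(jan_env_value MODEL_URL .env)
  if [ -z "$model_url" ]; then
    echo "MODEL_URL is not set in .env" >&2
    return 1
  fi
  models_dir=jan-inference/llm/models
  mkdir -p "$models_dir" || return 1
  # -O replaces any incomplete file instead of saving a ".1" copy.
  wget -O "$models_dir/${model_url##*/}" "$model_url" || return 1
  docker compose down && docker compose up -d
}

# Example (from the repository root):
#   jan_redownload_llm
```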

Contributing

Contributions are welcome! Please read the CONTRIBUTING.md file for guidelines on how to contribute to this project.

License

This project is licensed under the Fair Code License. See LICENSE.md for more details.

Authors and Acknowledgments

Created by Jan. Thanks to all contributors who have helped to improve this project.

Support and Contact

For support or to report issues, please email support@jan.ai.